What are the best load balancing methods and algorithms?

This kind of question was asked a lot more frequently ten years ago, usually followed by the second most common question: "Why do I need two load balancers?" In fact, we were asked the second question so often that we used it as the title on our homepage for ages. It amazed me that people would go to all the effort of configuring a cluster of multiple application servers but only use ONE load balancer!

These days, people understand a lot more about high-availability best practices and patterns (N+1 redundancy, for example). The primary function of a load balancer is to keep your application running with no downtime. It is an invaluable tool for systems architects, and it has the benefit of being pretty simple to understand.

Before I continue discussing the fundamentals of load balancing methodology, are you actually looking for a relatively unbiased comparison of the best software load balancers?

If you're still with me, let's look at the basics.

What is a load balancer?

A load balancer is simply a device that distributes internet traffic evenly across a group of application servers. Its key advantage over DNS is that it can check that each server is responding, and that it's actually giving the correct response! You'll find further clarification in our 'What is a load balancer?' blog.

Before load balancers we used DNS servers with multiple A records. The trouble with DNS was the lack of health checks on servers - so if the hard drive failed on one of your servers, clients would still be directed to it.
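To make the DNS comparison concrete, here is a minimal Python sketch, assuming a hypothetical three-server pool (`SERVERS`) where one server's disk has failed (`HEALTHY`). Plain multi-A-record DNS keeps handing out every address in turn, while a load balancer only rotates through servers that passed their last health check. Real resolvers also cache and reorder records, which this toy model ignores.

```python
from itertools import cycle

# Hypothetical pool and health states, for illustration only.
SERVERS = ["web1", "web2", "web3"]
HEALTHY = {"web1": True, "web2": False, "web3": True}  # web2's hard drive has failed


def dns_round_robin(servers, n):
    """Hand out A records in rotation with no health checks, like multi-A-record DNS."""
    rotation = cycle(servers)
    return [next(rotation) for _ in range(n)]


def health_checked_round_robin(servers, healthy, n):
    """Rotate only through servers that passed their most recent health check."""
    live = [s for s in servers if healthy[s]]
    if not live:
        raise RuntimeError("no healthy servers available")
    rotation = cycle(live)
    return [next(rotation) for _ in range(n)]


if __name__ == "__main__":
    print(dns_round_robin(SERVERS, 6))                      # still sends clients to web2
    print(health_checked_round_robin(SERVERS, HEALTHY, 6))  # skips web2 entirely
```

In a real load balancer the `HEALTHY` map would be refreshed by periodic checks (for example, requesting a page and verifying the response content) rather than hard-coded.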

Why do you need two load balancers?

These days, systems engineers have a much better grasp of fundamental high-availability requirements. If your load balancer fails then your customers will be very upset. So you definitely want a couple of them in a clustered pair.

Lori MacVittie (who is much better at writing than me) has an excellent explanation.

Most of our customers have at least three load balancers - preferably five or more. Otherwise they couldn't run a full development and testing environment before moving to production.

What makes Loadbalancer.org different?

The Loadbalancer.org appliance is one of the most flexible load balancers on the market. The design allows different load balancing modules to utilize the core high availability framework. Multiple load balancing methods can be used at the same time, or in combination with each other.

Which methods and algorithms are best?

All load balancers (Application Delivery Controllers) use the same load balancing methods. It's very common for people to choose a particular method because it is what they were told to do, rather than because it's actually right for their application.

Load balancers traditionally use a combination of routing-based OSI Layer 2/3/4 techniques (generally referred to as Layer 4 load balancing). All modern load balancers also support layer 7 techniques (full application reverse proxy). However, just because the number is bigger, that doesn't mean it's a better solution for you! 7 blades on your razor aren't necessarily better than 4.

| Method | Description |
| --- | --- |
| Layer 4 DR (Direct Routing) | Ultra-fast local server-based load balancing. Requires handling the ARP issue on the real servers. |
| Layer 4 NAT (Network Address Translation) | Fast Layer 4 load balancing. The appliance becomes the default gateway for the real servers. |
| Layer 4 TUN | Similar to DR but works across IP-encapsulated tunnels. |
| Layer 4 LVS-SNAT | You can configure layer 4 to act as a reverse proxy, using IPTables rules. Very useful if you need to proxy UDP traffic. |
| Layer 7 SSL Termination | Usually required in order to process cookie persistence in HTTPS streams on the load balancer. Processor intensive. |
| Layer 7 SNAT (HAProxy) | Layer 7 allows great flexibility including full SNAT and WAN load balancing, HTTP or RDP cookie insertion and URL switching. |

Direct Routing (DR) load balancing method

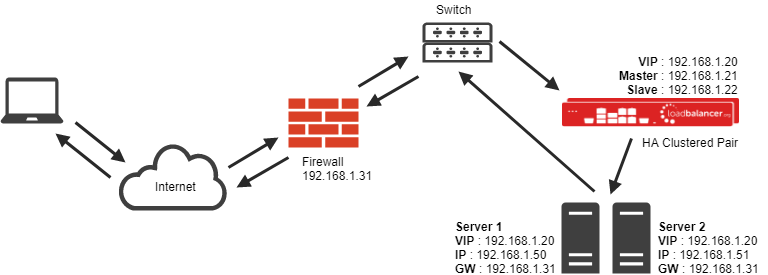

The one-arm direct routing (DR) mode is recommended for Loadbalancer.org installations because it's a very high performance solution requiring little change to your existing infrastructure. (NB. Foundry Networks call this Direct Server Return and F5 call it N-Path.)

- Direct routing works by changing the destination MAC address of the incoming packet on the fly, which is very fast.

- However, this means that when the packet reaches the real server, the server must treat the VIP as one of its own addresses. You need to make sure the real server responds to traffic for the VIP but does not respond to ARP requests for it.

- On average, DR mode is 8x quicker than NAT for HTTP, 50x quicker for terminal services and much, much faster for streaming media or FTP.

- Direct routing mode enables servers on a connected network to access either the VIPs or RIPs. No extra subnets or routes are required on the network.

- The real server must be configured to respond both to the VIP and its own IP address.

- Port translation is not possible in DR mode, so the RIP port must be the same as the VIP port.
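The DR mechanics above can be modelled in a few lines. This is a minimal Python sketch, not the kernel's implementation; the `Frame` and `RealServer` types, the MAC addresses and the IPs are all hypothetical. Forwarding swaps only the destination MAC, so the IP destination is still the VIP and the port is unchanged. The real server accepts the frame only because it owns the VIP, and it stays silent to ARP for the VIP (on Linux this is commonly done by placing the VIP on loopback and adjusting the `arp_ignore`/`arp_announce` sysctls).

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Frame:
    dst_mac: str
    src_ip: str
    dst_ip: str
    dst_port: int


@dataclass(frozen=True)
class RealServer:
    mac: str
    rip: str
    vip: str  # assumed to be configured on a non-ARPing interface such as loopback

    def accepts(self, frame: Frame) -> bool:
        """Accept frames sent to our MAC whose IP destination is an address we own."""
        return frame.dst_mac == self.mac and frame.dst_ip in (self.rip, self.vip)

    def answers_arp_for(self, ip: str) -> bool:
        """Answer ARP only for the RIP, so ARP for the VIP still resolves to the load balancer."""
        return ip == self.rip


def dr_forward(frame: Frame, server: RealServer) -> Frame:
    """Direct routing: swap in the real server's MAC; IP addresses and ports are untouched."""
    return replace(frame, dst_mac=server.mac)


# Hypothetical addresses for illustration.
VIP = "192.168.1.100"
RS1 = RealServer(mac="02:00:00:00:00:11", rip="192.168.1.11", vip=VIP)
incoming = Frame(dst_mac="02:00:00:00:00:01", src_ip="198.51.100.7", dst_ip=VIP, dst_port=80)
out = dr_forward(incoming, RS1)
```

Because `dst_port` passes through untouched, the real server has to listen on the same port as the VIP. Its reply also goes straight back to the client with the VIP as the source, which is why vendors call this Direct Server Return.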

When using a load balancer in one-arm DR mode all load balanced services can be configured on the same subnet as the real servers. The real servers must be configured to respond to the virtual server IP address as well as their own IP address.

Often ADC vendors will give a long list of problems with DSR (Direct Server Return) load balancing. But it's normally because they are trying to sell you features that a properly designed application like Exchange 2013 should not need. Surprisingly enough, they'll tell you that you need the most expensive load balancer model in order to use the unnecessary feature they are recommending. One example which always makes me laugh is the Kemp Technologies sizing tool for Exchange 2013, which Microsoft specifically designed so that you don't need to terminate SSL on the load balancer. But Kemp insist on enabling SSL termination by default. This means that practically ANY variable you change in the sizing tool recommends the most expensive load balancers!

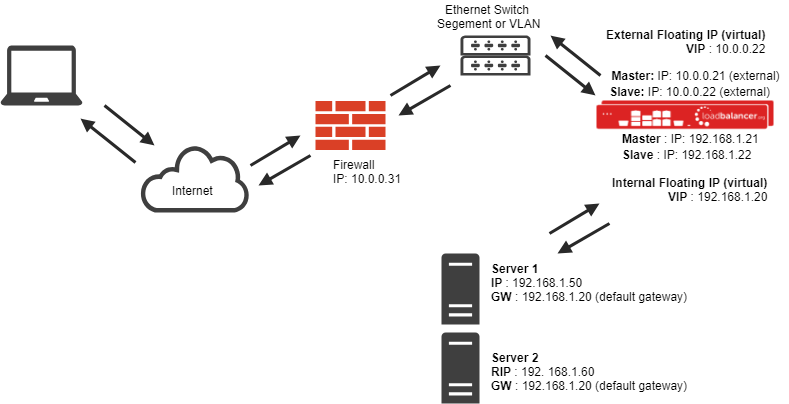

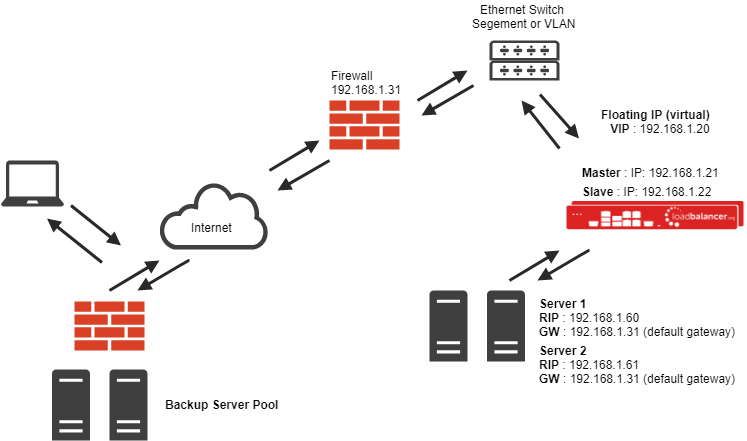

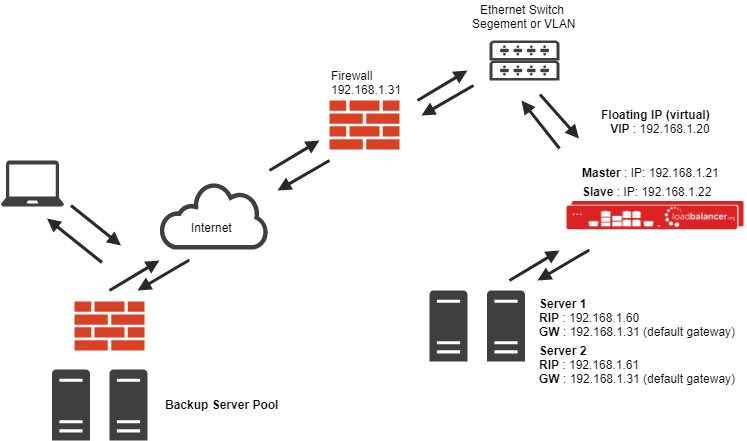

Network Address Translation (NAT) load balancing method - two-arm

Sometimes it isn't possible to use DR mode - most commonly, because the application cannot bind to RIP and VIP at the same time, or because the host operating system cannot be modified to handle the ARP issue. The best choice in these cases would be Network Address Translation (NAT) mode.

This is also a fairly high-performance solution, but it requires the implementation of a two-arm infrastructure with an internal and external subnet to carry out the translation (the same way a firewall works). Network engineers with experience of hardware load balancers will often have used this method.

- In two-arm NAT mode the load balancer translates all requests from the external virtual server to the internal real servers.

- The real servers must have their default gateway configured to point at the load balancer.

- For the real servers to be able to access the internet on their own, the setup wizard automatically adds the required MASQUERADE rule in the firewall script (some vendors incorrectly call this S-NAT).

- If you want real servers to be accessible on their own IP address for non-load balanced services, e.g. SMTP, you will need to set up individual SNAT and DNAT firewall script rules for each real server. Alternatively, you can set up a dedicated virtual server with just one real server as the target.

- Please see the advanced NAT considerations section of our administration manual for more details on these two issues.
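Two-arm NAT can be pictured as a connection table. This minimal Python sketch assumes hypothetical addresses (`VIP`, `REAL_SERVERS`) and plain round-robin selection; it is not the appliance's firewall script. Inbound packets have their destination rewritten from the VIP to a real server, and replies only get their source rewritten back to the VIP if they pass through the load balancer, which is why the real servers' default gateway must point at it.

```python
from dataclasses import dataclass, replace
from itertools import cycle


@dataclass(frozen=True)
class Packet:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


# Hypothetical addressing: external VIP and internal real servers.
VIP = "203.0.113.10"
REAL_SERVERS = ["10.0.0.11", "10.0.0.12"]


class NatBalancer:
    def __init__(self, vip, real_servers):
        self.vip = vip
        self._rotation = cycle(real_servers)
        # (client_ip, client_port) -> real server chosen for that connection
        self.connections = {}

    def inbound(self, pkt):
        """Client -> VIP: rewrite the destination to a real server (DNAT)."""
        key = (pkt.src_ip, pkt.src_port)
        if key not in self.connections:
            self.connections[key] = next(self._rotation)
        return replace(pkt, dst_ip=self.connections[key])

    def outbound(self, pkt):
        """Real server -> client reply: rewrite the source back to the VIP.

        This only happens if the reply is routed through the load balancer.
        """
        key = (pkt.dst_ip, pkt.dst_port)
        if self.connections.get(key) != pkt.src_ip:
            return pkt  # not a tracked connection, leave it alone
        return replace(pkt, src_ip=self.vip)
```

Note that the real server sees the client's genuine source address, so NAT mode is transparent; the cost is that every reply must be routed back through the load balancer.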

When using a load balancer in two-arm NAT mode, all load balanced services can be configured on the external IP. The real servers must also have their default gateways directed to the internal IP. You can also configure the load balancers in one-arm NAT mode, but in order to make the servers accessible from the local network you need to change some routing information on the real servers.

To be honest, we at Loadbalancer.org have always found two-arm mode a pain in the neck to support and configure. It always involves network downtime as you play with configurations and try to get new subnets, VLANs and even DMZs talking to each other. Once, we had a bank screw this up on a live website - and it was not pretty.

The only reason you usually can't do NAT in one-arm mode (same subnet) is that your local servers won't be able to talk to the load balanced cluster unless they change their routing table to use the load balancer as a default gateway. (This is very difficult on Windows Server, but that's another story.) If, however, all of the clients accessing the cluster are on a different network (such as the Internet), then one-arm NAT mode is great!
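The local routing issue comes down to the real server's reply path. This minimal Python sketch assumes a hypothetical one-arm layout (`SUBNET`, `LB_IP`) and only models the server's routing decision: a client on the same subnet is reached directly, bypassing the load balancer, so the reply keeps the RIP as its source and the client (which sent to the VIP) drops it. Remote clients are reached via the default gateway, i.e. the load balancer, which translates the reply correctly.

```python
import ipaddress

# Hypothetical one-arm setup: VIP, load balancer and real servers share one subnet.
SUBNET = ipaddress.ip_network("10.0.0.0/24")
LB_IP = "10.0.0.2"


def reply_next_hop(client_ip, server_default_gw, server_subnet=SUBNET):
    """Return where a real server sends its reply to client_ip.

    A directly connected destination is delivered straight to the client;
    anything else goes via the server's default gateway.
    """
    if ipaddress.ip_address(client_ip) in server_subnet:
        return client_ip  # on-link route: bypasses the load balancer
    return server_default_gw


def reply_is_translated(client_ip, server_default_gw):
    """The VIP source address is restored only if the reply passes through the load balancer."""
    return reply_next_hop(client_ip, server_default_gw) == LB_IP
```

With the server's default gateway set to `LB_IP`, an Internet client such as `198.51.100.7` gets a correctly translated reply, while a local client such as `10.0.0.50` does not, which is why extra routing changes are needed for local access.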

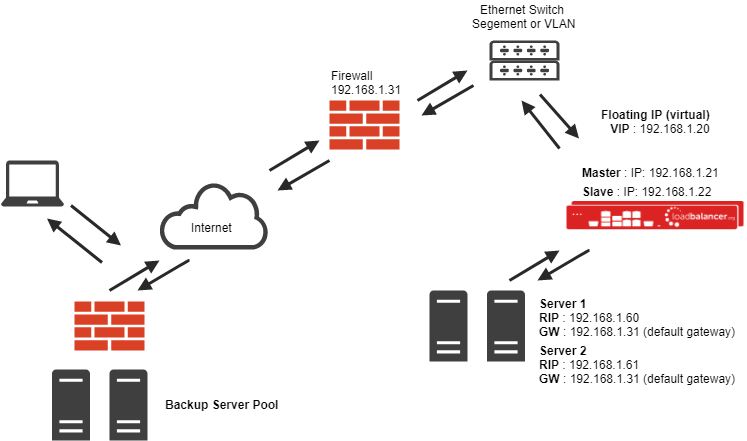

Network Address Translation (NAT) load balancing method - one-arm

Now that a lot of our customers are moving to the Amazon cloud with our AWS-based load balancer, we are getting a lot more configurations in one-arm NAT mode. When you are using AWS, most of the time your clients are on a different network (such as the Internet), so any return traffic is always routed correctly.

Configuring your servers in AWS can be a real mind-bender, but once you get the hang of it, it becomes really easy. I'm a bit rusty so I virtually always have to go and look at Robert Cooper's excellent documentation.

One-arm NAT mode is a fast, flexible and easy way to deliver transparent load balancing for your application. The only drawback is the local routing issue.

We use it heavily in the Amazon cloud, because you can't use DR mode there.

Why choose a Layer 7 Reverse Proxy?

I've written some fairly aggressive blogs in the past about problems with Layer 7 reverse proxies. But technology has moved on rapidly, and performance is no longer a problem. They are also really simple to understand and implement, so I often recommend them as a good place to start.

I have 5 scenarios where you might have to use Layer 7 Reverse Proxy mode:

- You need to route traffic to servers on different networks (watch out for high latency!).

- You need some kind of fancy application persistence like cookies.

- You need to do some kind of content inspection and re-routing.

- You need some kind of caching or rate limiting for your servers.

- Um... lots of other things that you should really fix in your application and not on the load balancer...

Source Network Address Translation (SNAT) load balancing method - layer 7

If your application requires the load balancer to handle cookie insertion then you need to use the SNAT configuration. This also has the advantages of a one-arm configuration and does not require any changes to the application servers. However, since the load balancer is acting as a full proxy it doesn't have the same raw throughput as the routing-based methods.
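Cookie insertion is easiest to see as a routing rule on each HTTP request. Here is a minimal Python sketch, assuming a hypothetical cookie name (`SERVERID`), hypothetical server names and round-robin selection for new clients; it is not HAProxy's implementation. Because the proxy has to read and set cookies inside the HTTP stream, this only works at Layer 7, and for HTTPS only once SSL has been terminated on the load balancer.

```python
from itertools import cycle

# Hypothetical cookie name; real load balancers let you configure it.
COOKIE_NAME = "SERVERID"


class CookiePersistence:
    def __init__(self, servers):
        self.servers = list(servers)
        self._rotation = cycle(self.servers)

    def route(self, request_cookies):
        """Pick a backend for one HTTP request and return (server, cookies_to_set).

        If the client already carries a valid persistence cookie, honour it;
        otherwise pick the next server and insert a cookie naming it.
        """
        pinned = request_cookies.get(COOKIE_NAME)
        if pinned in self.servers:
            return pinned, {}
        server = next(self._rotation)
        return server, {COOKIE_NAME: server}
```

A first request from `{}` lands on `web1` and receives `{"SERVERID": "web1"}`; later requests carrying that cookie stick to `web1`. If the named server is no longer in the pool, the client is simply re-balanced and given a new cookie.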

The network diagram for the Layer 7 HAProxy SNAT mode is very similar to the Direct Routing example except that no re-configuration of the real servers is required. The load balancer proxies the application traffic to the servers so that the source of all traffic becomes the load balancer.

- As with other modes, a single unit does not require a Floating IP.

- SNAT is a full proxy and therefore load balanced servers do not need to be changed in any way.

Because SNAT is a full proxy, any server in the cluster can be on any accessible subnet, including across the Internet or WAN. SNAT is not transparent by default, so the real servers will see the source address of each request as the load balancer's IP address. The client's source IP address will be in the X-Forwarded-For header (see TPROXY method).
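Here is a minimal Python sketch of how the client address survives a non-transparent proxy, assuming headers are held in a plain dict with exact key names (real HTTP header names are case-insensitive). The proxy appends the client's IP to `X-Forwarded-For`, and the backend, whose TCP peer is the load balancer, reads it back from the header. The helper names are hypothetical.

```python
def add_x_forwarded_for(headers, client_ip):
    """Return a copy of the request headers with client_ip appended to X-Forwarded-For.

    If an upstream proxy already set the header, the new address is appended
    as "existing, client_ip" rather than replacing it.
    """
    out = dict(headers)
    existing = out.get("X-Forwarded-For")
    out["X-Forwarded-For"] = f"{existing}, {client_ip}" if existing else client_ip
    return out


def original_client_ip(headers, peer_ip):
    """What a backend can recover: the left-most X-Forwarded-For entry, else the TCP peer."""
    xff = headers.get("X-Forwarded-For")
    if not xff:
        return peer_ip
    return xff.split(",")[0].strip()
```

One caution: a client can send its own `X-Forwarded-For` header, so in practice a backend should only trust the entries added by proxies it controls.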

NB. Rather than messing around with TPROXY, did you know you can load balance based on the X-Forwarded-For header? (Pretty cool, eh?)
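Balancing on the header amounts to hashing the client address it carries. This minimal Python sketch assumes the first `X-Forwarded-For` entry identifies the client and uses a simple hash-modulo scheme; the function name is hypothetical. HAProxy, for example, offers a `balance hdr(<name>)` option for this kind of header-based hashing; check your version's documentation for the exact behaviour.

```python
import hashlib


def pick_server_by_xff(headers, servers):
    """Map the client address in X-Forwarded-For to a backend with a stable hash.

    The same client IP lands on the same server as long as the pool is unchanged.
    """
    xff = headers.get("X-Forwarded-For", "")
    client = xff.split(",")[0].strip()
    digest = hashlib.sha256(client.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]
```

`hashlib` is used instead of Python's built-in `hash()` because string hashing is randomised per process, which would break stickiness across restarts. A plain modulo also remaps most clients when servers are added or removed; consistent hashing reduces that churn.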

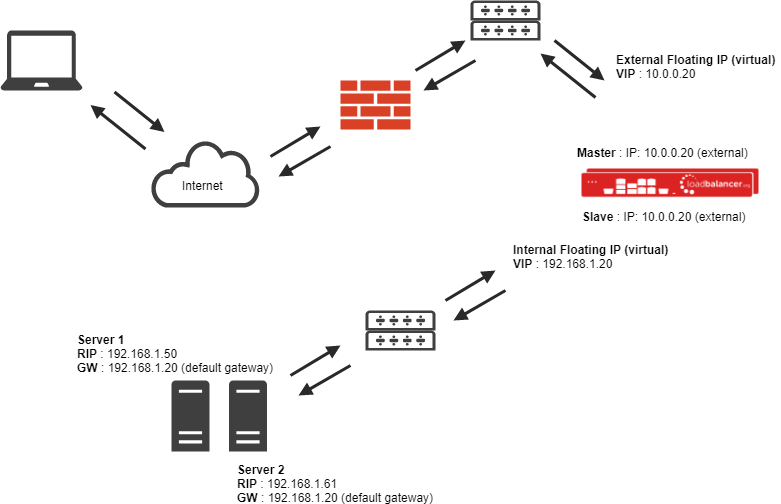

Transparent Source Network Address Translation (SNAT-TPROXY) load balancing method

If the source address of the client is a requirement then HAProxy can be forced into transparent mode using TPROXY. This requires that the real servers use the load balancer as the default gateway (as in NAT mode) and only works for directly attached subnets (as in NAT mode).

- As with other modes, a single unit does not require a Floating IP.

- SNAT acts as a full proxy but in TPROXY mode all server traffic must pass through the load balancer.

- The real servers must have their default gateway configured to point at the load balancer.

Transparent proxy is impossible to implement over a routed network (a wide area network such as the Internet). To get transparent load balancing over the WAN you can use the TUN load balancing method (Direct Routing over an IP-in-IP tunnel) with Linux or UNIX based systems only.

SSL Termination or Acceleration (SSL) with or without TPROXY

All of the Layer 4 and Layer 7 load balancing methods can handle SSL traffic in pass-through mode, where the backend servers do the decryption and encryption of the traffic. This is very scalable, as you can just add more servers to the cluster to gain higher Transactions Per Second (TPS). However, if you want to inspect HTTPS traffic in order to read or insert cookies, you will need to decrypt (terminate) the SSL traffic on the load balancer. You can do this by importing your private key and signed certificate to the load balancer, giving it the authority to decrypt traffic. The load balancer uses standard Apache/PEM format certificates.

You can define a Pound/Stunnel SSL virtual server with a single backend - either a Layer 4 NAT mode virtual server or more usually a Layer 7 HAProxy VIP - which can then insert cookies.

Pound/Stunnel-SSL is not transparent by default, so the backend will see the source address of each request as the load balancer's IP address. The client's source IP address will be in the X-Forwarded-For header. However, Pound/Stunnel-SSL can also be configured with TPROXY to ensure that the backend can see the source IP address of all traffic.

LVS Source Network Address Translation (LVS-SNAT) load balancing method - layer 4

Using a special trick with IPTables you can implement LVS-SNAT configuration at layer 4. This also has the advantage of a one-arm configuration and does not require any changes to the application servers. This is incredibly useful for load balancing UDP when you don't need source IP address transparency.

The network diagram for the Layer 4 LVS-SNAT mode is very similar to the Direct Routing example except that no re-configuration of the real servers is required. The load balancer proxies the application traffic to the servers so that the source of all traffic becomes the load balancer.

- As with other modes, a single unit does not require a Floating IP.

- LVS-SNAT is a full proxy and therefore load balanced servers do not need to be changed in any way.

Because LVS-SNAT is a full proxy, any server in the cluster can be on any accessible subnet, including across the Internet or WAN. LVS-SNAT is not transparent, so the real servers will see the source address of each request as the load balancer's IP address. You can't use TPROXY with a Layer 4 LVS-SNAT configuration.
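The reason LVS-SNAT needs no changes on the real servers is that both addresses are rewritten. This minimal Python sketch is a simplified model of source-plus-destination NAT for UDP, not the actual IPTables rules (which the text doesn't spell out); the addresses, the `SnatBalancer` class and the 40000+ source-port range are hypothetical. Inbound datagrams are sent to a real server from the load balancer's own IP, so replies naturally come back to the load balancer, which maps them to the original client and sends them from the VIP.

```python
from dataclasses import dataclass
from itertools import count, cycle


@dataclass(frozen=True)
class Datagram:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


# Hypothetical one-arm addressing.
LB_IP = "10.0.0.2"
VIP = "10.0.0.100"


class SnatBalancer:
    def __init__(self, vip, lb_ip, real_servers):
        self.vip = vip
        self.lb_ip = lb_ip
        self._rotation = cycle(real_servers)
        self._ports = count(40000)  # hypothetical source-port range for translated flows
        self._by_client = {}  # (client_ip, client_port, vip_port) -> (lb_port, server)
        self.flows = {}       # lb_port -> (client_ip, client_port, vip_port)

    def inbound(self, dgram):
        """Client -> VIP: rewrite BOTH addresses so the server replies to the load balancer."""
        key = (dgram.src_ip, dgram.src_port, dgram.dst_port)
        if key not in self._by_client:
            lb_port = next(self._ports)
            self._by_client[key] = (lb_port, next(self._rotation))
            self.flows[lb_port] = key
        lb_port, server = self._by_client[key]
        return Datagram(self.lb_ip, lb_port, server, dgram.dst_port)

    def reply(self, dgram):
        """Server -> load balancer: map back to the original client, sourced from the VIP."""
        client_ip, client_port, vip_port = self.flows[dgram.dst_port]
        return Datagram(self.vip, vip_port, client_ip, client_port)
```

The server only ever sees `LB_IP` as the source address, which is exactly the loss of transparency described above.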