The explosion of information in our everyday lives has driven the technology market to create newer, faster technologies for storing and accessing the data we create.

The applications we use send billions of bits of information across networks each second, and this is driving a need for higher speeds in every part of the network.

Ensuring information is in the right place at the right time is critical for almost every business, and the path between devices needs to support this. As the amount of information grows, we need to ensure that the underlying network does not become a bottleneck at any point, so that performance is maintained.

As with all technologies, Ethernet has seen huge increases in the speeds available to the market in recent years. How do we choose between the varying technologies, and which of them open the door to the simplest and most cost-effective upgrade path?

40G/100G QSFP infrastructure is mature and has gained a large market share over the past decade. In recent years, meanwhile, 25G/50G/100G SFP infrastructure has been attracting more attention and is becoming more prevalent in network deployments. These newer Ethernet technologies are not simply designed to set a new, higher speed; they also cater to specific market demands and developments.

25GbE in particular is catching on quickly: shipments of 25GbE adapters and ports reached 1 million in less than half the time it took 10GbE, the technology that, until recently, was most widely used for high-performance server and device connectivity.

Why is 25G SFP growing so fast?

25G SFP28 has a similar form factor to the ubiquitous 10G SFP+, it’s backwards compatible, it allows dense port configurations, and it's generally cheaper than QSFP-based infrastructure. Also, it’s incredibly hard to get an application server to run much faster than 25G anyway!

So when do you need faster than 25G?

The obvious reason is data center connectivity: 25G, 50G and 100G all have wide applications in cloud data centers now. The new technologies provide a far simpler path to higher speeds, allowing 10G-25G-50G-100G network upgrades without having to re-plan or change the number of servers per rack. Before the emergence of 25G and 50G, the traditional migration path was 10G-40G-100G, which is more costly and less space efficient.

By contrast, upgrading from 25G to 100G can be more cost-effective. With a switch infrastructure based on 25G, 100G can be reached with 2x50G or 4x25G.

This offers higher port density and performance per switch, and it saves both CAPEX (capital expenditure) and OPEX (operational expenditure) through backward compatibility and reuse of the existing cabling infrastructure. On the whole, the 25G-50G-100G migration path provides a lower cost per unit of bandwidth by fully utilizing switch port capabilities, and it also lays the foundation for a further upgrade to 200G.

Organizations that have already invested in QSFP-format switches also have an upgrade path (40G-100G-200G), but the newer technologies may require specialist cabling and the physical size of the switch hardware may differ, so planning is not as simple.

But what about application level resilience at 25G+?

Another key requirement in most networks is a high level of resilience to meet the always-on needs of today’s technology services. At Loadbalancer.org, high availability has always been our number one priority.

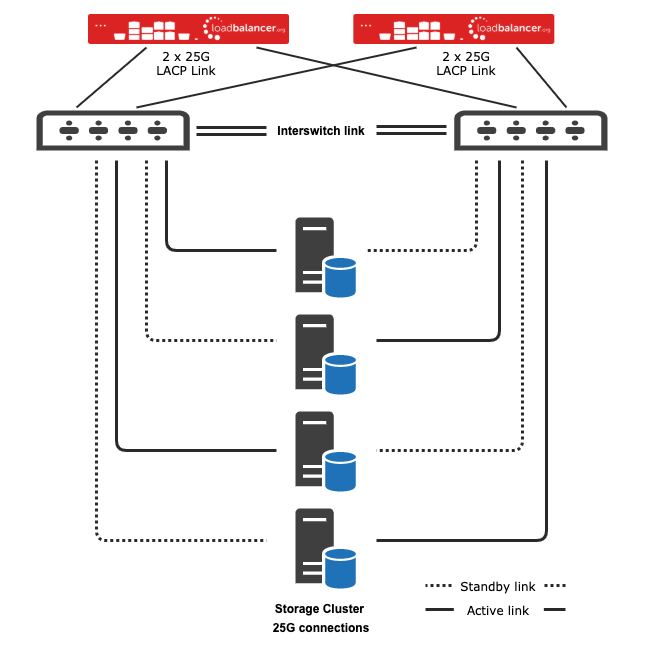

We strongly recommend that all production environments use a dual switch fabric. This ensures that multiple links are active at once, providing a shared path from the load balancer to the network infrastructure. With multiple links active, the effect of a failure in any one link is minimised, as traffic can still use and share the other link(s).

Our approach to this is to use dynamic link aggregation mode, which is based on the industry-standard IEEE 802.3ad Link Aggregation Control Protocol (LACP). This mode requires the load balancer to be connected to a switch that supports the same IEEE 802.3ad features. It provides both fault tolerance and load sharing between the interfaces. In many cases a link failure causes no noticeable interruption at all, and otherwise failover typically completes in well under a second.
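As a rough illustration of the load sharing and fault tolerance described above, the sketch below maps each flow to one member link using a stable hash, then recomputes the mapping after a link drops out of the aggregate. This is a simplified model, not how any particular switch or load balancer implements it: LACP itself only negotiates which links belong to the aggregate, and the hash policy used to spread flows is device specific. The function name `pick_link`, the interface names and the flow tuples are all hypothetical.

```python
import zlib

def pick_link(flow, active_links):
    """Return the link that carries `flow`, chosen by a stable hash of the flow tuple."""
    if not active_links:
        raise RuntimeError("no active links left in the aggregate")
    # Illustrative hash only; real devices use their own transmit hash policies.
    key = "|".join(str(field) for field in flow).encode()
    return active_links[zlib.crc32(key) % len(active_links)]

# Hypothetical flows: (src_ip, src_port, dst_ip, dst_port)
flows = [("10.0.0.%d" % i, 40000 + i, "10.0.1.10", 443) for i in range(1, 9)]

both_up = ["eth0", "eth1"]   # two member links, both active
one_down = ["eth1"]          # eth0 has failed and left the aggregate

before = {flow: pick_link(flow, both_up) for flow in flows}
after = {flow: pick_link(flow, one_down) for flow in flows}

for flow in flows:
    print(flow[0], before[flow], "->", after[flow])
```

Each flow sticks to one link while the membership is unchanged, which keeps packets within a flow in order. When a link fails, every flow is simply rehashed onto the remaining members.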

The typical use case for native 50G or 100G with Loadbalancer.org customers is large-scale, high-performance object storage. Typically you will have up to 10 application servers per site, each pushing 10G+ through a single pair of load balancers. Historically most of our customers used 2x40G for these high-throughput systems, but we're currently noticing a big trend towards 2x25G and 4x25G ADC configurations.

So, how do I easily scale bandwidth?

One of the most common ways to meet rising bandwidth requirements is to use LACP to increase the number of links in a channel. Depending on the switch the load balancer connects to, it is often possible to create channels with up to 8 (or occasionally more) links.

Although this method increases the bandwidth available per channel, it requires a high number of interfaces. That can reduce the number of devices per rack and add the cost of new switches and cabling to provide the extra ports. It may also provide no extra resilience: if all links in the channel are connected to the same switch and that switch fails, every link in the channel is lost.
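The trade-off above can be put into numbers with a small sketch. It counts how many links of each speed a channel needs to reach a target bandwidth, and how much bandwidth survives when one switch fails. The 100G target, the switch names and the function names are assumptions made for illustration, and the 8-link limit is only the typical figure mentioned above. Spreading one channel across two switches also assumes the switches support multi-chassis link aggregation.

```python
import math

MAX_LINKS_PER_CHANNEL = 8  # typical limit mentioned above; varies by switch

def links_needed(target_gbps, link_gbps):
    """Number of equal-speed links required to reach the target bandwidth."""
    return math.ceil(target_gbps / link_gbps)

def surviving_gbps(links, failed_switch):
    """Bandwidth left when one switch fails; links is a list of (switch, gbps)."""
    return sum(gbps for switch, gbps in links if switch != failed_switch)

# How many ports does a 100G channel consume at each link speed?
for speed in (10, 25, 50, 100):
    n = links_needed(100, speed)
    fits = n <= MAX_LINKS_PER_CHANNEL
    print(f"{speed}G links: {n} needed, fits in one channel: {fits}")

# Four 25G links on one switch versus two on each of two switches.
single_switch = [("sw-a", 25)] * 4
dual_switch = [("sw-a", 25), ("sw-a", 25), ("sw-b", 25), ("sw-b", 25)]

print(surviving_gbps(single_switch, "sw-a"))  # 0
print(surviving_gbps(dual_switch, "sw-a"))    # 50
```

Under these assumptions, 100G built from 10G links needs 10 ports and exceeds the 8-link limit, while 25G links need only 4. The same four links keep half their bandwidth after a switch failure only when they are split across two switches.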

Are you stuck with an ADC that only supports 10G SFP?

Fixed-configuration load balancers may have little option other than to use this method, as no alternative upgrade path is available. This can also affect the choice of upgraded network switch hardware, as “legacy” interfaces may be required to cater for load balancers with a fixed port configuration. This can add to the cost of new hardware and in some cases may even require dedicated hardware for the legacy load balancer.

Another concern for many companies who embed load balancer technologies into overall packaged solutions is the need for flexibility of product. As their system requirements grow and expand into new higher speed solutions, the ability of a load balancer to natively cater for these is critical.

At Loadbalancer.org we believe in choice. As such, we have recently released new products to meet the needs of any form factor required. We were one of the first vendors to support native 10G and 40G in our ADC range. Working closely with our object storage vendor partners, we have simplified our high-end product range to include 25G, 50G and 100G, where the 25G is fully backwards compatible with 10GbE. Read more about it in this blog.

As new modules become available in each form factor (50G in SFP56, 100G in SFP-DD), we will strive to include them in the range as soon as is practical. This means we can meet all current requirements and provide clear paths for future scalability with all options available.

The future and ensuring ROI

These emerging 25G/50G/100G technologies have adapted well to the diverse needs of the market and have in turn led the direction of the industry. However, the many varying standards and connectors are still highly confusing to most people.

It seems increasingly likely to me that the SFP+/28/56 form factor will become the dominant one. However, you don’t need to throw out your existing and expensive 40/80/100 QSFP-based infrastructure.

Network managers are always looking for a balance between speed, complexity, reuse and standardization to find a cost-effective solution. At Loadbalancer.org we ensure we're positioned to meet these needs — whichever path is chosen:

Hexa Core Intel® Xeon®

Adapter:

- 2 x 1GbE + 2 x SFP+

- 2 x SFP28 25GbE on Mellanox MCX512F-ACAT

Alternative options:

- 2 x 10GBASE-T Copper

- 4 x QSFP28 40GbE on Mellanox MCX314A-BCCT

Intel® Xeon® Gold

Adapter:

- 2 x 1GbE

- 2 x QSFP28 100GbE on Mellanox MCX516A-CDAT

Alternative options:

- 4 x SFP28 25GbE on Mellanox MCX512F-ACAT

- 4 x QSFP28 40GbE on Mellanox MCX314A-BCCT

- 2 x QSFP28 50GbE on Mellanox MCX516A-GCAT