Global Server Load Balancing (GSLB) allows you to improve network performance by distributing internet or corporate network traffic across servers in multiple locations, anywhere in the world.

This document provides a comprehensive guide to Global Server Load Balancing (GSLB): what it is, how it works, and how to configure it.


Table of contents

- GSLB explainer

- Global versus local server load balancing

- GSLB benefits

- How GSLB works with DNS

- How GSLB load distribution works

- GSLB network diagram

- GSLB health checks

- Common GSLB use cases

- GSLB example: object storage

- How to configure GSLB

What is Global Server Load Balancing?

Global Server Load Balancing (GSLB) is the methodology used to distribute internet and corporate network traffic across servers in multiple locations, anywhere in the world. Those servers can be, for example, in an organization’s own data center or hosted in a private or public cloud.

The end result of GSLB is improved network performance, increased reliability, and high availability, for a superior user experience.

Just as a load balancer distributes traffic between connected servers in a single data center, GSLB distributes traffic between connected servers in multiple locations. If one server, in any location, fails, or if an entire data center becomes unavailable, GSLB reroutes the traffic to another available server somewhere else in the world. Equally, GSLB can detect users’ locations and automatically route their traffic to the best available server in the nearest data center.

Demand for GSLB has grown significantly in the last three years, as large numbers of organizations have migrated away from traditional on-premise systems and have instead created hybrid cloud and hosted environments. Many have also made the strategic decision to split their data resources across multiple locations to improve business resilience and reduce costs.

In all these instances, GSLB allows organizations to deliver a high-quality, reliable experience for users, no matter where they are in the world and no matter where their applications and data are located.

As such, it's no wonder that almost all large-scale, global businesses, especially those in e-commerce, finance, and content delivery, are believed to rely on GSLB for optimal performance and availability. Meanwhile, a significant number of medium-sized businesses are also likely to be leveraging GSLB for their critical operations or global customer base.

Although GSLB is less commonly used by small businesses, their interest in GSLB techniques grows as their online footprint and traffic demands increase.

What is the difference between Global and Local Server Load Balancing?

Local Server Load Balancing (SLB)

The need for local load balancing arises when we have an application or service which has a demand placed upon it that cannot be provided by a single server.

Another server is added to perform the same function, effectively doubling the number of requests that can be handled; it is at this point that you should also add a load balancer to the solution. The reason for this is that as soon as you add a secondary server and create a pool of devices providing your service, you introduce a problem. That problem is "how should requests be distributed between the servers?".

There are myriad ways to solve this issue, but the best and simplest is to use a load balancer.

The need for Global Server Load Balancing (GSLB) can arise from a number of use cases, including but not limited to:

- Reduced latency by sending requests to the closest available servers

- Additional redundancy of a workload that is replicated between multiple data centers

- Data sovereignty for information that must be kept within certain localities and/or geographical boundaries

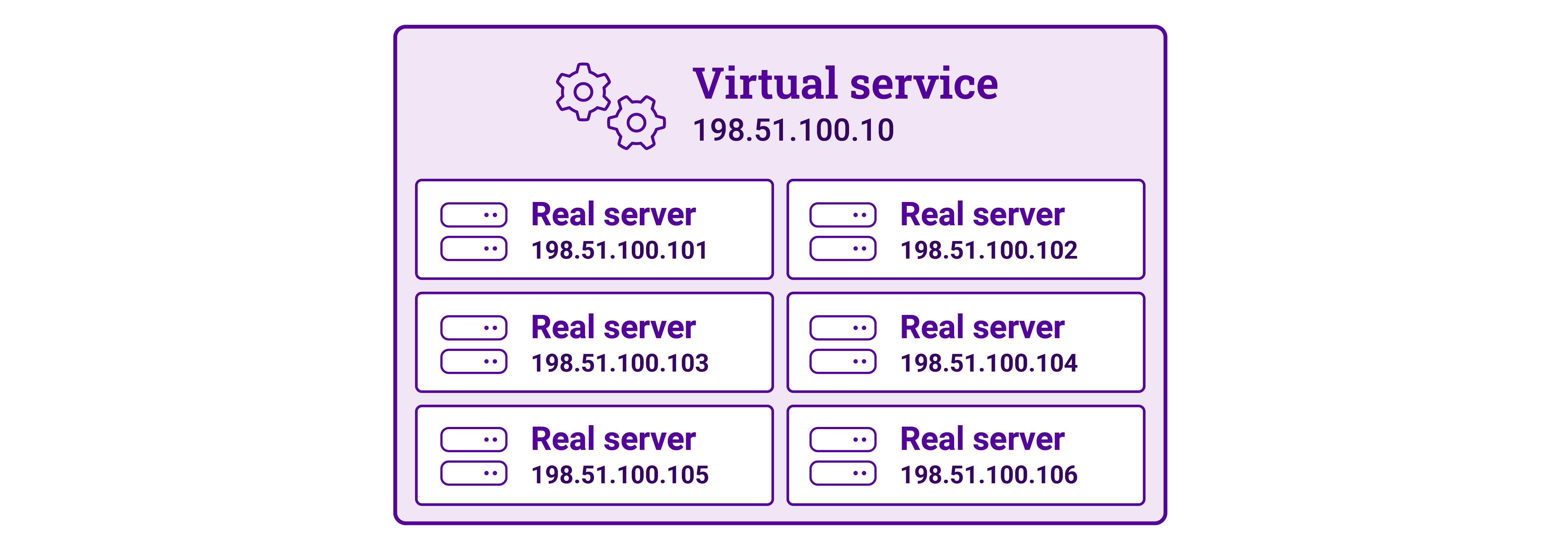

With local Server Load Balancing (SLB) there is a Virtual Service with a corresponding Virtual IP address (VIP) that receives incoming requests and a Pool of Real Servers to distribute the requests to.

How Local Server Load Balancing (SLB) works

1. A client sends a connection to the VIP.

2. The virtual service evaluates the request and sends it to a Real Server.

Global Server Load Balancing (GSLB)

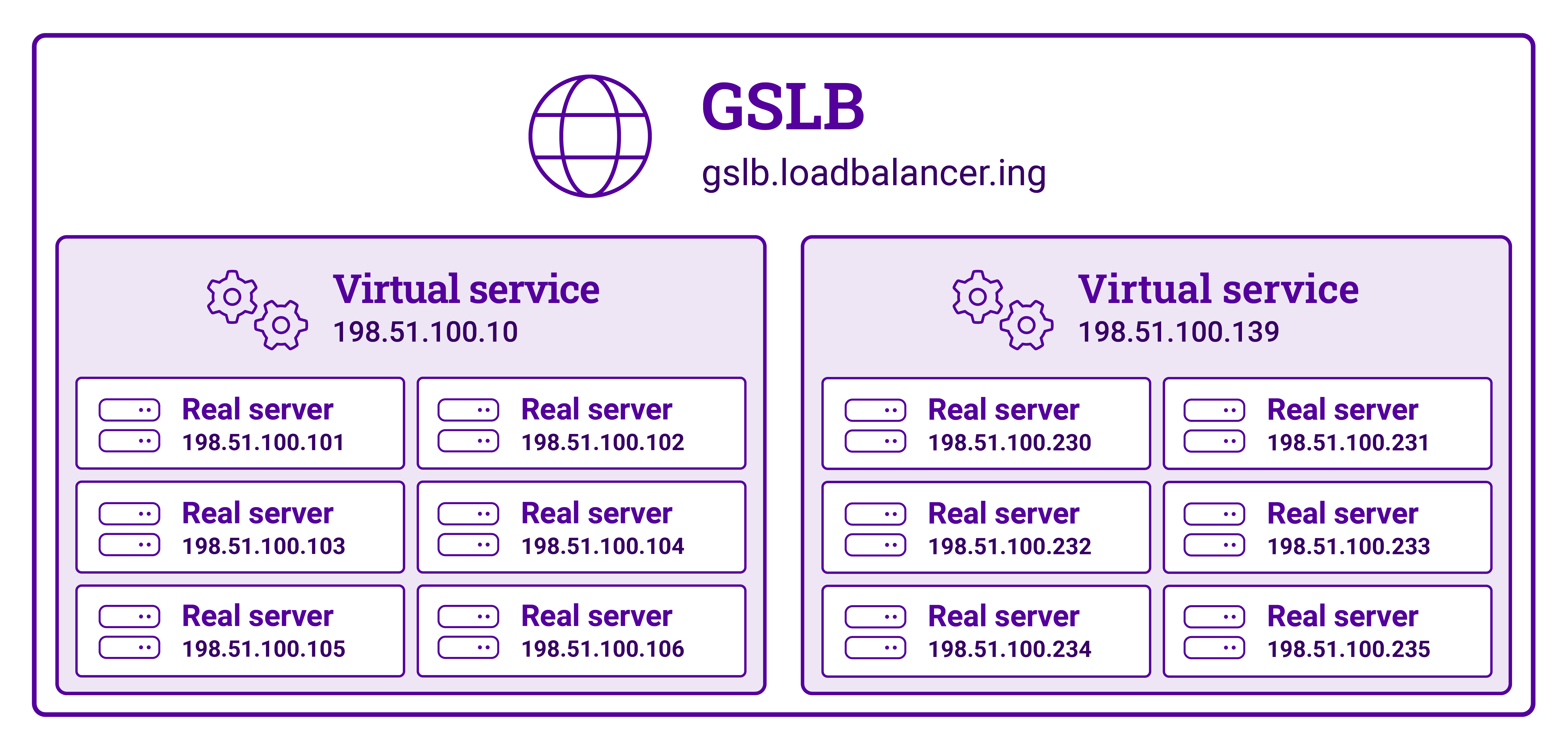

With Global Server Load Balancing (GSLB) there are multiple sites each with a Virtual Service and associated Real Servers.

Each site functions the same as with local load balancing. The difference with GSLB is that the initial connection point has moved upwards.

GSLB is configured with a Global Name or FQDN (e.g. gslb.loadbalancer.ing) that resolves to one of the VIPs depending on how GSLB is configured. The client then connects to the selected VIP and is load balanced to one of the associated Real Servers in the normal way.

What this diagram shows is that Global Server Load Balancing (GSLB) is load balancing Virtual Services. That is, it is load balancing load balancers.

How Global Server Load Balancing (GSLB) works

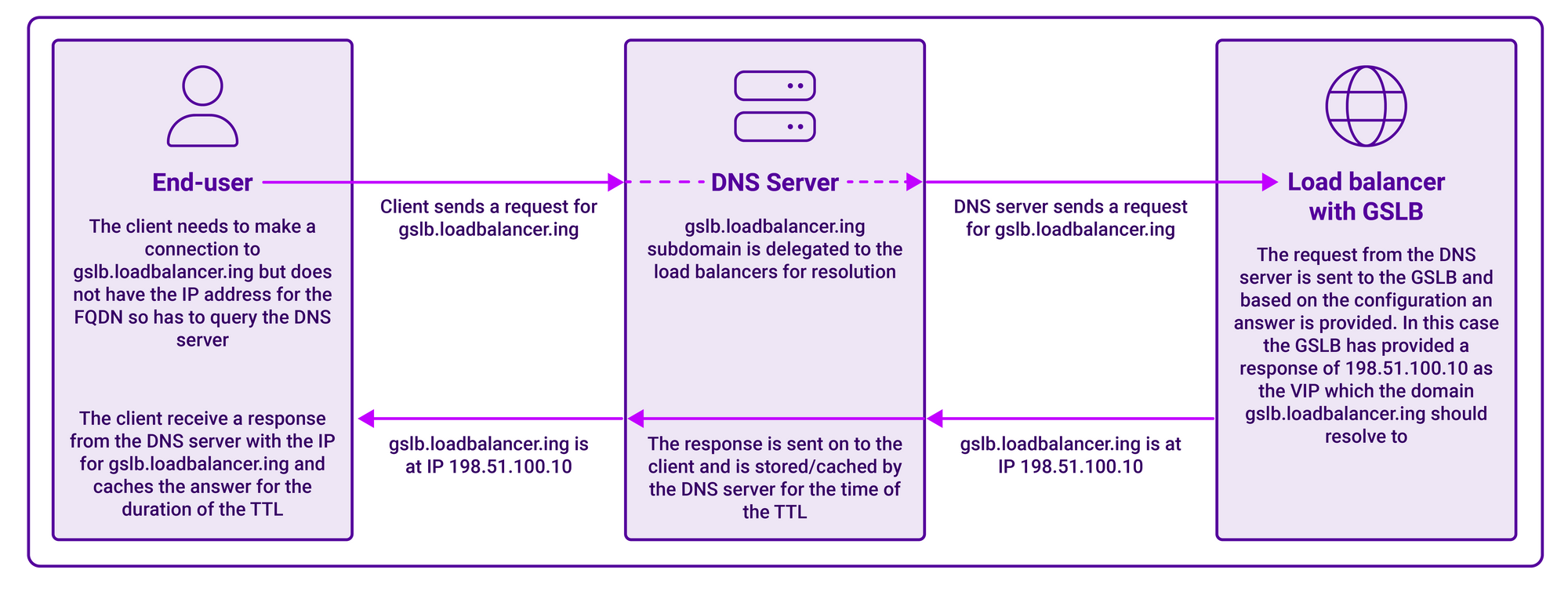

With Global Server Load Balancing (GSLB), a number of DNS-related processes must be performed first before the traffic can be passed to the correct destination.

- The client needs to make a connection to gslb.loadbalancer.ing but does not have the IP address for the FQDN so has to query the DNS server.

- Client sends a request for gslb.loadbalancer.ing.

- The gslb.loadbalancer.ing subdomain is delegated to the load balancer(s) for resolution.

- The DNS server sends a request for gslb.loadbalancer.ing.

- The request from the DNS server is sent to the GSLB and based on the configuration, an answer is provided. In this case, the GSLB has provided a response of 198.51.100.10 as the VIP which the domain gslb.loadbalancer.ing should resolve to.

- The response is sent on to the client and is stored/cached by the DNS server for the time of the TTL.

- The client receives a response from the DNS server with the address for gslb.loadbalancer.ing and caches the answer for the duration of the TTL.

Please also refer to the diagram above.
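The resolution steps above can be sketched as a small simulation. This is only an illustration and does not speak real DNS: `gslb_answer` stands in for the GSLB, `CachingResolver` stands in for the DNS server (or the client's own cache), and the 30-second TTL is an assumed value rather than one taken from this guide.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Tuple
import time

GSLB_NAME = "gslb.loadbalancer.ing"  # the delegated name used in the steps above
ASSUMED_TTL = 30                     # seconds; hypothetical value for illustration


def gslb_answer(fqdn: str) -> Tuple[str, int]:
    """Stand-in for the GSLB: return (VIP, TTL) for a configured Global Name."""
    if fqdn == GSLB_NAME:
        return "198.51.100.10", ASSUMED_TTL
    raise LookupError(f"{fqdn} is not delegated to the GSLB")


@dataclass
class CachingResolver:
    """Stand-in for the DNS server: asks upstream once, then serves from cache."""
    upstream: Callable[[str], Tuple[str, int]]
    cache: Dict[str, Tuple[str, float]] = field(default_factory=dict)
    upstream_queries: int = 0

    def resolve(self, fqdn: str, now: Optional[float] = None) -> str:
        now = time.monotonic() if now is None else now
        cached = self.cache.get(fqdn)
        if cached and cached[1] > now:
            return cached[0]               # answer is still within its TTL
        self.upstream_queries += 1         # cache miss: delegate to the GSLB
        ip, ttl = self.upstream(fqdn)
        self.cache[fqdn] = (ip, now + ttl)
        return ip
```

Resolving the name twice within the TTL only reaches the GSLB once, which is why a change in the GSLB's answer is not seen by clients until cached entries expire.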

What are the benefits of GSLB?

GSLB (Global Server Load Balancing) operates at the DNS level, allowing you to direct users to the nearest or most available server based on factors such as geographic location, server load, and network latency.

This results in two main benefits:

- Improved performance

- High availability

Improved performance for a more responsive user experience

Optimized GSLB traffic distribution means reduced latency and improved response times for end users.

Global Server Load Balancing (GSLB) controls which users are directed to which data centers. It offers sophisticated topology functionality that enables organizations to easily route user traffic to the nearest server, thereby minimizing unnecessary bandwidth consumption, reducing the distance of the ‘hop’ that user requests have to travel and speeding up server responses.

Equally, organizations can use GSLB to direct user traffic to specific local data centers to enable them to deliver localized content, relating to the geographic location of the users, or meet country-specific regulatory or security requirements.

High availability for a more reliable user experience

GSLB reduces downtime and service interruptions, and automatic failover from one site or country to another keeps service delivery seamless.

Global Server Load Balancing (GSLB) improves the resilience and availability of key applications, by enabling all user traffic to be switched instantly and seamlessly to an alternative data center in the event of an unexpected outage. Organizations can use GSLB to constantly monitor application performance at geographically separate locations and ensure the best possible application availability across multiple sites.

When routine maintenance is required, organizations can also use GSLB to temporarily direct user traffic to an alternative site, avoiding the need for disruptive downtime.

How does GSLB work with DNS?

The Loadbalancer.org GSLB implementation is built on open-source software. There are two core components: PowerDNS and Polaris.

PowerDNS

PowerDNS is an open-source DNS server software designed to provide a robust, scalable, and versatile platform for routing internet traffic. It is widely used for its high performance, ease of management, and flexibility, supporting various back-ends such as BIND, MySQL, PostgreSQL, and Oracle. PowerDNS features a strong security model and is capable of handling a vast number of queries per second, making it suitable for deployment in high-traffic environments.

It is also known for its advanced features like DNSSEC for securing DNS lookups and a versatile API for automation and integration with other tools and systems.

Polaris

The Polaris GSLB project provides an open-source Global Server Load Balancing (GSLB) solution that can be extended for high availability across data centres and beyond. Polaris enhances the capabilities of PowerDNS Authoritative Server with features such as load-balancing methods, customizable health monitors, and dynamic server weighting. It supports running health checks against different IPs from the Member IP, allowing a single Health Tracker to serve multiple DNS resolvers.

Its setup is designed to be elastic and asynchronous, providing non-blocking communication between its internal components.

DNS infrastructure

GSLB relies heavily on DNS. However, the appliance cannot take control of DNS requests and responses on its own. The network needs to be configured to pass DNS requests for the appropriate subdomains to the Global Server Load Balancing (GSLB) on the load balancer appliances.

Whilst it is not technically a core component of the GSLB, it is a key part of the process, and needs to be understood for a successful implementation.

How does GSLB load distribution work?

There are a number of different load distribution methods that can be employed with Global Server Load Balancing (GSLB).

Failover Group load balancing method (fogroup)

The Failover Group (fogroup) load balancing method is a chained failover. That is, the Pool Members are configured in an order of preference. If a Member is marked as down due to a health check failure, the GSLB will use the next highest-priority Member of the Pool.

Failover Group (fogroup) example

For example, assume there are three Members in a Pool that is configured with the LB Method set to fogroup. Connections will be sent to Member 1 at all times as it is the first configured Member in the Pool.

If Member 1 fails then connections are sent to Member 2 as the second configured Member in the Pool.

If Member 2 also fails then all the requests will then be sent to Member 3.

Now, if Member 1 were to come back online (Member 2 is still down) then all connections would be sent to Member 1 again.
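The failover behaviour in this example can be expressed in a few lines. This is a minimal sketch rather than the appliance's implementation; the function name and the `healthy` mapping are hypothetical.

```python
from typing import Dict, List, Optional


def fogroup_select(members: List[str], healthy: Dict[str, bool]) -> Optional[str]:
    """Return the first Member, in configured order, that is passing health checks."""
    for member in members:
        if healthy.get(member, False):
            return member
    return None  # no Member is available


pool = ["member1", "member2", "member3"]
status = {"member1": False, "member2": False, "member3": True}
choice = fogroup_select(pool, status)  # member3, as both earlier Members are down
```

Because the selection always starts from the top of the list, a recovered Member 1 immediately takes traffic back, matching the last step of the example.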

Weighted round robin load balancing method (wrr)

When Weighted Round Robin (wrr) is employed as the LB Method for a Pool, all the requests will be distributed amongst the Pool Members. The distribution of requests is performed in accordance with the weighting that each Member is given. The weight of the Member directly relates to the ratio of requests that should be sent to it relative to the weight of the other Pool Members.

It is worth noting that the distribution of requests with regard to the weighting is eventually consistent as requests are sent in batches of three.

Weighted round robin (wrr) example

For example, assume there are three Members in a Pool that is configured with the LB Method set to wrr, and that the Members have weights of 3, 2, and 1.

Over time, Member 1 receives roughly three out of every six requests, Member 2 receives two, and Member 3 receives one.

If a Member is marked as down due to a health check failure, it is taken out of the rotation and requests are shared between the remaining Members in proportion to their weights.
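The weighted distribution described for wrr can be sketched as follows. This is a deliberately simple illustration, not necessarily the algorithm the GSLB uses internally: each healthy Member is placed in a rotation as many times as its weight. The function name, Member names, and weights are assumptions.

```python
from typing import Dict, List


def wrr_schedule(weights: Dict[str, int], healthy: Dict[str, bool], n: int) -> List[str]:
    """Return the Members chosen for the next n requests.

    Each healthy Member appears in the rotation as many times as its weight,
    so over a full rotation the answers follow the configured ratio.
    """
    rotation = [
        member
        for member, weight in weights.items()
        if healthy.get(member, False)
        for _ in range(weight)
    ]
    if not rotation:
        return []  # nothing healthy to answer with
    return [rotation[i % len(rotation)] for i in range(n)]


weights = {"member1": 3, "member2": 2, "member3": 1}
all_up = {"member1": True, "member2": True, "member3": True}
answers = wrr_schedule(weights, all_up, 6)  # one full rotation: 3 + 2 + 1 answers
```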

Topology Weighted Round Robin load balancing method (twrr)

Topology Weighted Round Robin (twrr) is the same as wrr, but with an additional piece of evaluation. Before the requests are distributed based on the weighting of the Pool they are evaluated against a topology table. Topology tables are configured in the system and are used to associate Members with particular IP addresses or ranges.

This feature allows administrators to preferentially set the destination for a request based on where the request is coming from. When a DNS request is received by the GSLB, one of the pieces of information that is available to it is the IP or subnet of where the request came from.

Topology weighted round robin (twrr) example

Let’s say you have a data centre in London (10.100.100.10) and a data centre in Madrid (10.200.200.10). The users are also based in the UK (10.100.10.0/24) and in Spain (10.200.20.0/24). Either of the data centres is able to service requests from any user and GSLB is being used to increase redundancy and availability. During normal working, it makes sense for requests to be handled by the data centre which is geographically closest to the requester.

This can be achieved by configuring a topology named "Spain" that contains the IPs 10.200.200.10 and 10.200.20.0/24. The topology tells the GSLB that requests which are sourced from 10.200.20.0/24 should be sent to the Member 10.200.200.10, as they are linked in the topology table. If the Madrid data centre Member was failing health checks then the request would be sent to another valid Member in the topology table, such as the London data centre.

In the case that there are no available Members, the GSLB would revert to the fallback method. This can be either refuse, where the DNS request is simply refused, or any, where a random failing Member is sent as a response.
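The topology lookup and fallback logic can be sketched like this, using the London and Madrid data centre addresses from the example. It is an illustrative model only: the `TOPOLOGIES` structure, the "UK" topology name, and the function name are assumptions, and the function returns the candidate Members that weighted round robin would then choose between.

```python
import ipaddress
from typing import Dict, List

# Hypothetical topology table: each topology links Member IPs to source subnets.
TOPOLOGIES = {
    "UK": {"members": ["10.100.100.10"], "sources": ["10.100.10.0/24"]},
    "Spain": {"members": ["10.200.200.10"], "sources": ["10.200.20.0/24"]},
}


def twrr_candidates(source_ip: str, topologies: Dict[str, dict],
                    healthy: Dict[str, bool], fallback: str = "any") -> List[str]:
    """Return the Members eligible to answer a request from source_ip."""
    src = ipaddress.ip_address(source_ip)
    all_members = [m for topo in topologies.values() for m in topo["members"]]

    # 1. Prefer healthy Members in the same topology as the requester.
    for topo in topologies.values():
        if any(src in ipaddress.ip_network(net) for net in topo["sources"]):
            local = [m for m in topo["members"] if healthy.get(m, False)]
            if local:
                return local

    # 2. Otherwise use any healthy Member, wherever it is.
    up = [m for m in all_members if healthy.get(m, False)]
    if up:
        return up

    # 3. Nothing is healthy: apply the fallback method.
    return all_members if fallback == "any" else []  # "refuse" yields no answer
```

A request from 10.200.20.15 is answered with the Madrid Member while it is healthy, and with the London Member if Madrid is failing its health checks.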

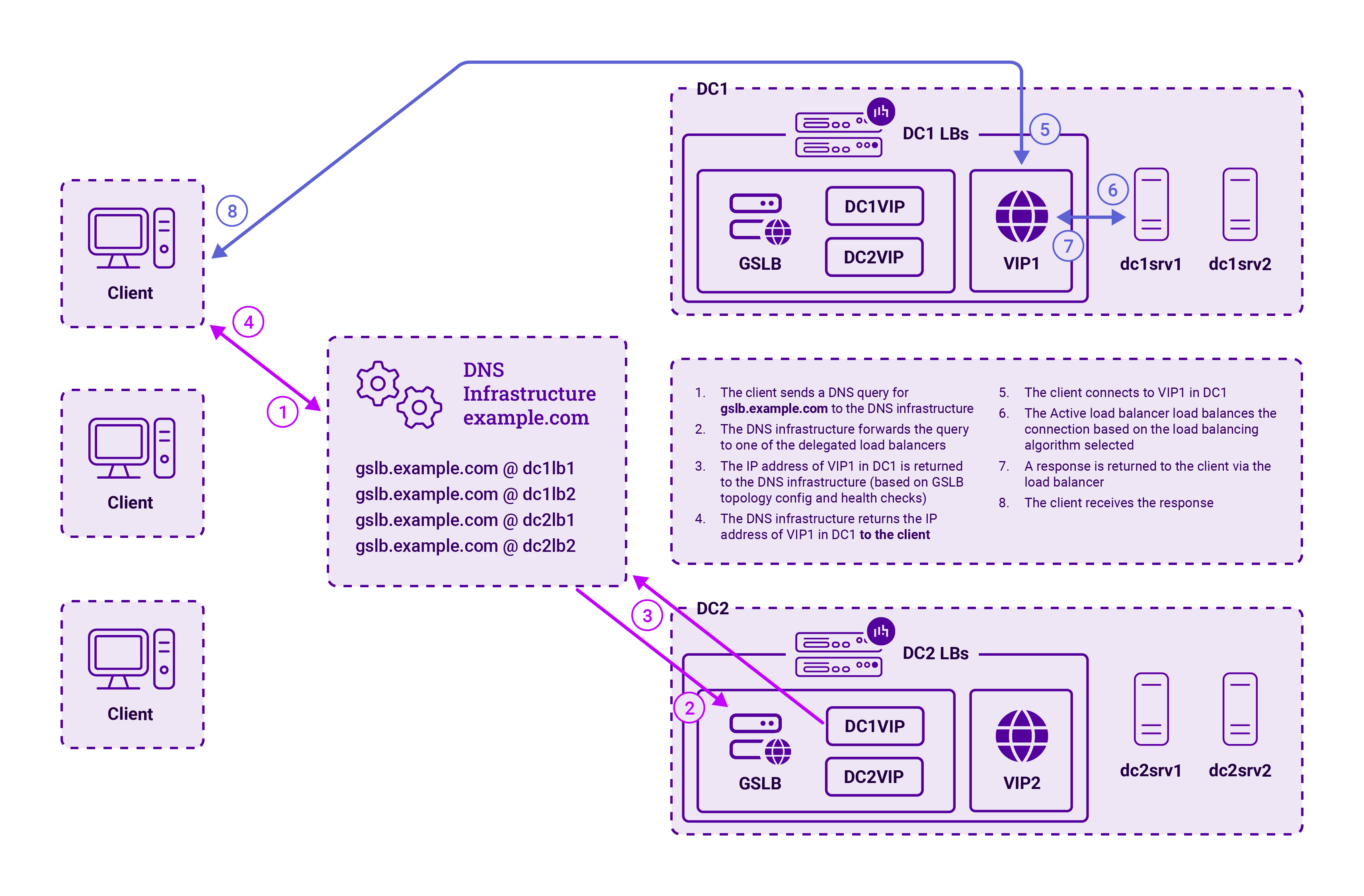

What does a GSLB network diagram look like?

GSLB architecture can be complex, so here is a simplified version for ease of understanding:

What are GSLB health check types?

Global Server Load Balancing (GSLB) systems typically offer a variety of health check methods to assess the availability and performance of backend servers.

GSLB HTTP/HTTPS health checks

HTTP health checks allow the GSLB to make an HTTP GET to the Member IPs (or their configured monitor IP, if set) to query their status. The check expects an HTTP status code in the response, which is used to evaluate whether the health check has passed.
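As a rough illustration of what such a check does, the sketch below issues a GET with Python's standard `http.client` module and compares the status code with a list of accepted codes. The function name, parameter names, and defaults are assumptions for this example and are not the appliance's configuration options.

```python
import http.client
from typing import Iterable


def http_health_check(ip: str, port: int = 80, path: str = "/",
                      expected_codes: Iterable[int] = (200,),
                      timeout: float = 2.0, use_ssl: bool = False) -> bool:
    """Return True if a GET to the Member returns one of the expected status codes."""
    conn_cls = http.client.HTTPSConnection if use_ssl else http.client.HTTPConnection
    conn = conn_cls(ip, port, timeout=timeout)
    try:
        conn.request("GET", path)
        return conn.getresponse().status in tuple(expected_codes)
    except (OSError, http.client.HTTPException):
        # Refused connections, timeouts, TLS errors and malformed replies all fail the check.
        return False
    finally:
        conn.close()
```

Treating every connection-level error as a failed check keeps the result a simple pass or fail, which is all the load distribution logic needs.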

GSLB TCP health checks

TCP health checks perform a TCP connect against the Member IPs (or their configured monitor IP, if set) to check if the GSLB is able to connect to the port of the Member. If the port is open/responds then the health check passes. You can optionally provide a send string and an expected response to that string to evaluate the status of the health check.
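A TCP check of this kind can be sketched with the standard `socket` module. This is an illustrative example, not the appliance's code: the function and parameter names are hypothetical, and the response is read with a single `recv`, which is enough for short banners but not for long replies.

```python
import socket
from typing import Optional


def tcp_health_check(ip: str, port: int, timeout: float = 2.0,
                     send: Optional[bytes] = None,
                     expect: Optional[bytes] = None) -> bool:
    """Return True if the port accepts a connection (and, optionally, replies as expected)."""
    try:
        with socket.create_connection((ip, port), timeout=timeout) as sock:
            if send is None:
                return True          # a successful connect is enough
            sock.sendall(send)
            if expect is None:
                return True
            return expect in sock.recv(1024)
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return False
```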

GSLB Forced health checks

Forced health checks will always evaluate as 'passing' or 'failing' depending on the configuration that has been applied.

GSLB External health checks

The power and the possibilities of health checking are really unleashed with the external monitor. External monitors are health check scripts that can be created by the administrator to perform any logic that can be programmed into the GSLB. For example, you can create a script that checks a URL that requires authentication.

GSLB External dynamic weight health check

External Dynamic Weight health checks take the power of External health checks and then also provide the ability to update the weight of the Members based on the results of those checks. The weights are changed dynamically.

As an example, a storage vendor could expose a metric based on disk, network, and CPU utilization of their nodes and make that information available to be queried by the GSLB external health check.

Based on that metric the health check can compute and update the weighting that is set on that Member. Members with low utilization can have their priority raised, reducing the amount of work that high-utilization Members are having to do. This allows you to proactively flatten the distribution curve for the Members providing a better user experience.
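One possible way to turn utilization figures into a weight is sketched below. The formula is an assumption chosen for illustration (the busiest resource decides the weight, and a healthy Member never drops below a weight of 1); a real external check would use whatever metric the storage vendor exposes.

```python
def dynamic_weight(cpu: float, disk: float, network: float, max_weight: int = 10) -> int:
    """Map utilization figures (0.0 to 1.0) to a weight; busier Members get lower weights."""
    utilization = max(cpu, disk, network)           # the busiest resource is the bottleneck
    utilization = min(max(utilization, 0.0), 1.0)   # clamp out-of-range readings
    # Keep a floor of 1 so a busy but healthy Member still receives some requests.
    return max(1, round(max_weight * (1.0 - utilization)))


idle_node = dynamic_weight(cpu=0.2, disk=0.1, network=0.1)   # lightly loaded
busy_node = dynamic_weight(cpu=0.5, disk=0.9, network=0.3)   # disk is nearly saturated
```

With these inputs the lightly loaded node ends up with a much higher weight than the busy one, so it is handed a larger share of new requests.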

For examples of how these health checks work and more, see the Loadbalancer.org Global Server Load Balancing (GSLB) manual.

What are the common use cases for GSLB?

Global Server Load Balancing (GSLB) isn’t just a technical concept; it’s a key player in keeping services running smoothly, regardless of geographic boundaries. We’ll look at how it helps in scenarios like improving performance globally and ensuring service continuity during server outages.

By understanding these real-life applications, we’ll see how GSLB is more than just a theory – it’s an essential component in modern digital infrastructure, making our online interactions seamless and reliable.

GSLB use case: Business continuity and disaster recovery

GSLB serves as a pivotal technology in disaster recovery strategies, as it provides a geographically distributed approach to directing traffic across multiple data centres.

In the event of a local outage, GSLB can automatically reroute end-users to the nearest operational site with minimal disruption. This not only ensures continuous availability and business continuity but also helps to balance the load effectively during peak traffic times, thus maintaining performance standards.

By using GSLB, organizations can protect their operations from regional failures, effectively manage traffic during planned maintenance, and distribute client requests according to customized policies that align with their specific disaster recovery objectives.

GSLB use case: Content localization and latency

GSLB provides a way to perform content localization, which is a feature that enhances the end user experience by directing traffic to a preferred location. In the Loadbalancer.org appliances this is achieved with topologies and the twrr balancing method.

This localization is crucial for delivering content rapidly and reducing latency. This capability allows businesses to offer a tailored experience, with faster loading times.

GSLB use case: Compliance and geo load balancing

For organizations needing to comply with regional data protection regulations, GSLB can be an important piece of the solution. Users can be directed to the appropriate data centre based on their geographic location, ensuring that data storage and processing adheres to local compliance mandates, such as the GDPR in Europe or CCPA in California.

"Geo-awareness" is critical for multinational companies that must navigate a complex web of international laws. GSLB can help automate the process of routing traffic to comply with each jurisdiction’s unique data residency requirements, thereby reducing the risk of costly legal penalties and enhancing customer trust.

GSLB use case: Data sovereignty and geo load balancing

GSLB can play a vital role in maintaining data sovereignty, ensuring that digital information is stored and processed within the legal boundaries of a specific geography, country or region. GSLB facilitates this by routing user requests to data centres located within the appropriate geographical limits.

Using this functionality, organizations can guarantee that data residency and sovereignty policies are upheld, which is particularly significant for entities operating across multiple jurisdictions with stringent data protection laws. This strategic routing minimizes legal risks and aligns with national regulations, providing a robust framework for global data management.

GSLB use case: Scale-out NAS and subnets

One of the specific use cases that we are able to speak on with some authority is that of scale-out NAS. We are partnered with a number of storage providers, most notably with Cloudian through an OEM load balancer called HyperBalance.

The GSLB is deployed widely with object storage providers. The clusters that they deploy are usually replicated between data centres. As the data matches between all the locations all the GSLB options and methods are available.

Usually, the GSLB is implemented in the standard way, with topology weighted round robin applied so that users are directed to a preferred data centre.

This implementation allows the administrators to set which users/subnets should go to which data centre in the first instance. If the nearest data centre is failing health checks then the requester will be transparently directed to another data centre within the cluster.

Can you provide a real world example of using GSLB for object storage?

Let's take the real-world example of object storage, and consider how we might deploy GSLB.

Global Server Load Balancing: An object storage example

For argument's sake, let's say you are an object storage provider with storage clusters located at multiple data centers. Consequently, you need load balancers to balance the traffic within your data centers AND need load balancers to balance the traffic across data centers at different locations.

Regardless of which load balancing approach object storage providers adopt within their data centers — whether they use Layer 4 DR, Layer 7 SNAT or SDNS — a standard GSLB configuration can be used to share traffic across geographically dispersed sites.

GSLB is commonly used by object storage providers that replicate their stored data across multiple sites and want to allow their users to be able to access data from any object storage cluster at any of their sites.

GSLB answers the DNS queries for the service name and, in doing so, decides which data center each client connects to. In the data centers themselves, the requests are received by the local load balancers, which then use Layer 4 DR, Layer 7 SNAT or SDNS to decide which storage nodes will serve up the data requested.

GSLB can also be configured in a variety of other ways including weighted round robin and topology weighted round robin.

Weighted round robin GSLB

In a standard round robin approach, a load balancer configured with GSLB will allocate traffic in a circular, sequential way to all data centers, one after the other, so each site will receive the same number of requests. For example, a request from a client in London could be routed by a load balancer using GSLB to a Southampton, Newcastle or Reading data center.

Challenges can, however, arise if one data center has more or less capacity than another. In such situations, one data center can become overwhelmed, while another is underutilized.

The weighted round robin method can be used to assign weights to each data center based on the number of available physical servers or traffic-handling capacity. Traffic is then distributed to the data centers in a more intelligent way, based on each data center’s ability to respond effectively.

Topology weighted GSLB

When topology weighting is used, the load balancer will consider the source of the request when making the decision about where to direct it. So, for example, a rule could be established to instruct the load balancer to send users from the London IP range to the Reading data center in the first instance, while users in the Portsmouth area could be directed first to the data center in Southampton.

If the Reading data center were to fail a health check, indicating that the Reading servers had either gone offline or were busy, then the load balancer would direct London IP traffic to Southampton or Newcastle instead. This is, in effect, a round robin method, but with preferential requests taken into account.

Using topology weighting to reduce the physical distance between the client and the object storage cluster in the data center will ultimately improve response times.

This improvement in latency will be most noticeable when an object storage provider has data centers in different countries and topology weighting is used to ensure that a request from a user in the UK is not unnecessarily routed to a storage cluster in the USA, if another data center is geographically closer.

Topology weighting can also be used to overcome country-specific data governance restrictions. If, for example, a country does not permit certain types of data to be stored outside of the borders of that country, the load balancers can be configured to ensure that users are only served by a specific in-country data center.

GSLB direct-to-node: An object storage example

Now, let's say you want to load balance storage nodes within a single data center.

There are three main approaches to using load balancers within data centers to balance traffic across object storage nodes. No single deployment method is best for all storage environments, so object storage providers will need to carefully weigh up the pros and cons of each method.

One of these methods is GSLB direct-to-node, while the others are Layer 4 Direct Routing (DR) mode, and Layer 7 Source Network Address Translation (SNAT) mode. For the purposes of this blog, let's assume we want to consider GSLB direct-to-node. Global Server Load Balancing (GSLB) is typically used to share traffic equally across two or more data centers that are geographically separate.

However, it can sometimes be used in a non-standard way within a data center to enable traffic to travel to and from storage nodes, bypassing the load balancer completely. This deployment methodology is known as GSLB Direct-to-Node, or SDNS for short.

The client will query its configured DNS servers for an IP address to send the request to. The DNS infrastructure will, in turn, delegate requests for the configured hostname to the load balancers. The load balancer decides which storage node (a virtual or physical server) is available and best able to process that request quickly.

Then, the load balancer notifies the client of this decision, and the client responds by sending its packet directly to the allocated node. The server replies, delivering the requested data directly back from the node to the client, bypassing the load balancer for this return leg of the process too.

With SDNS, load balancers can be set up to perform dynamic weighting. This is the ability to change how requests to nodes in the cluster are distributed based on health checks or API calls to the cluster. If a node in a cluster is running out of storage, the load balancer can give it a lower weighting so fewer requests go to it, which improves the overall performance of the object storage solution.

SDNS is ideal for object storage providers with really large clusters of twenty or more nodes. As the load balancers only handle DNS traffic, and are not in path in either direction, they cannot create a bottleneck if traffic volumes are exceptionally high.

If an object storage provider has twenty storage nodes, each capable of connecting at ten gigabits per second, the combined 200 gigabits per second is theoretically too much for a load balancer with a throughput capacity of 100 gigabits per second. In reality, it is almost unheard of for organizations to experience sustained traffic at this level, but with data levels increasing, these huge workloads are coming. SDNS provides a way of resolving this scalability challenge.
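The arithmetic behind this comparison is shown below as a small helper. It assumes the node links are rated in gigabits per second, the same unit as the load balancer's capacity; the function names are hypothetical.

```python
def aggregate_gbps(nodes: int, per_node_gbps: float) -> float:
    """Theoretical peak throughput of the whole cluster, in gigabits per second."""
    return nodes * per_node_gbps


def exceeds_load_balancer(nodes: int, per_node_gbps: float, lb_capacity_gbps: float) -> bool:
    """True if the cluster could, in theory, push more traffic than an in-path load balancer can carry."""
    return aggregate_gbps(nodes, per_node_gbps) > lb_capacity_gbps


peak = aggregate_gbps(20, 10)                     # 20 nodes x 10 Gbps each
bottleneck = exceeds_load_balancer(20, 10, 100)   # compared with a 100 Gbps load balancer
```

With SDNS the load balancer only answers DNS queries, so this comparison no longer applies to it and the network itself becomes the limit.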

As with Layer 4 DR, hardware and throughput requirements will be lower, enabling object storage providers to reduce the specification and cost of load balancing. Throughput is not limited by the load balancer, but it will still be limited by the capacity of the network.

There are, however, some significant disadvantages to SDNS. If object storage providers previously used Layer 7 SNAT and then decided subsequently to move to SDNS, they would lose the ability for their load balancers to make intelligent routing decisions and use content switching.

Object storage providers would also lose the ability to achieve and manage persistence effectively. It is possible to emulate persistence by increasing DNS time-to-live (TTL) settings and extending the time that a cache is held. This approach will, however, direct traffic to the same node all the time, so is more likely to create hot nodes, with some nodes carrying a heavier load than others over time.

Another challenge results from client-side DNS caching. Clients can remember the DNS addresses previously provided by the load balancer and repeatedly direct requests to the same location, without following the load balancer’s instruction.

If organizations typically have high volumes of requests from a single client, in a short period of time—sometimes known as ‘bursty’ workloads—all these requests could go to just four nodes in a cluster of more than twenty.

These nodes would become overwhelmed, affecting their performance, while other nodes would be underutilized. For this reason, SDNS is only really suitable for object storage providers with consistent workloads, where the number of requests is very steady.

For more on GSLB direct-to-node: read this blog to find out how it works, and when to use it.

Choosing an object storage deployment

We've seen how Global Server Load Balancing (GSLB) is a critical component in ensuring the high availability, performance, and resilience of object storage in a globally connected platform. Having said that, object storage providers will need to think carefully before deciding which load balancing deployment method to use.

Getting it right can be a technical challenge for all but the most experienced engineers. Object storage providers should, therefore, leverage the expertise of a load balancing vendor that provides good support and advice, as well as detailed documentation to support seamless implementation, and step-by-step deployment instructions.

For more on object storage deployment options check out this white paper: Deployment options for object storage.

How do you configure GSLB?

Let's assume we've done our due diligence, considered all other options, and decided that we want to go about deploying GSLB. Where do we start?

There are five key steps to follow when configuring Global Server Load Balancing (GSLB).

- Global names

- Members

- Pools

- Topologies

- DNS setup

For screenshots and full details, see the comprehensive Loadbalancer.org GSLB manual.

Step 1: Global Names

The first step is to configure the Global Names. Global Names are the FQDNs that GSLB must respond to requests on. They must correspond to the configuration that is set in the DNS infrastructure.

Step 2: Members

Members are the individual IP addresses that a request can be resolved to and are associated to the Global Name via a Pool.

Typically and historically, the IP that is configured for a Member is the VIP for the Virtual Service that you are performing GSLB for. With increasing frequency we are seeing a requirement for the Member IPs to be configured as the node server IPs themselves. When the GSLB is configured in this way it is referred to as SDNS (Smart DNS). Previously this was called GSLB direct-to-node.

Step 3: Pools

The Pool ties the elements of the GSLB configuration together. The Members are assigned to the Pool and the Pool is assigned to the Global Name. The majority of the configuration is applied within the Pool.

Step 4: Topologies

Topologies are slightly confusingly placed at the end of the UI. To configure a Pool with a LB Method of twrr you must first configure a topology for it. The simple way to overcome this little hurdle is to first define your Pool with the LB Method set to wrr, configure your topologies and then go back and update the Pool to use twrr.
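How the pieces from Steps 1 to 4 relate to each other can be modelled in a few lines. This is a conceptual sketch and not the appliance's configuration format: the class names, the `validate` helper, the second VIP (203.0.113.10), and the rule that every Member of a twrr Pool must appear in a topology are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Member:
    ip: str          # usually a VIP, or a node IP when using SDNS
    weight: int = 1


@dataclass
class Pool:
    name: str
    lb_method: str   # "fogroup", "wrr" or "twrr"
    members: List[Member] = field(default_factory=list)


@dataclass
class GlobalName:
    fqdn: str        # must match the name delegated in DNS
    pool: Pool


def validate(global_name: GlobalName, topologies: Dict[str, List[str]]) -> List[str]:
    """Return a list of problems; an empty list means the configuration is consistent."""
    problems = []
    pool = global_name.pool
    if pool.lb_method not in ("fogroup", "wrr", "twrr"):
        problems.append(f"unknown LB Method {pool.lb_method!r}")
    if not pool.members:
        problems.append(f"Pool {pool.name!r} has no Members")
    if pool.lb_method == "twrr":
        mapped = {entry for entries in topologies.values() for entry in entries}
        for member in pool.members:
            if member.ip not in mapped:
                problems.append(f"Member {member.ip} is not in any topology")
    return problems
```

This mirrors the workaround described in Step 4: a Pool defined with wrr validates without any topologies, and it can be switched to twrr once topologies covering its Members exist.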

Step 5: DNS delegation and entry

Before the GSLB and its configuration can be tested in a meaningful way, DNS must be configured. Typically, in a production environment, it’s best to configure the DNS delegation and configuration for the network before you go to configure the GSLB on the load balancer appliances. This does depend on your DNS infrastructure.

DNS changes can take hours to propagate fully. Because of this, it is practical to complete the DNS configuration before implementing the GSLB. Bear in mind, however, that this is a double-edged sword.

If you add the DNS entries before the GSLB is configured then the network will not be able to correctly resolve those requests until the GSLB is in place. Unfortunately, this can also be one of the most confusing parts of a GSLB implementation so getting it right in the first place is not always straightforward.

For applications that have a corresponding deployment guide, it’s worth reading that first as some of them contain product-specific DNS delegation guidance.

Please note the distinction made between Windows and non-Windows Servers in the GSLB manual.

Conclusion

In summary, Global Server Load Balancing (GSLB) offers a powerful way to manage global traffic, improve application performance, and increase availability. It is a critical component in any organization’s multi-data center strategy.

GSLB is not just a traffic director, but also a strategic enabler for businesses operating across dispersed geographical locations. We’ve seen its role in enhancing user experience through reduced latency, its importance in disaster recovery, and its growing integration with cloud and hybrid environments. As it grows in popularity and application, the GSLB landscape is continually evolving, adapting to new challenges and technological advancements.

As you move forward, remember that the core principles of GSLB remain constant: intelligent distribution of traffic, additional redundancy and high availability, and the seamless global delivery of services. Whether you’re managing enterprise networks, architecting cloud solutions, or ensuring the smooth operation of large-scale object storage clusters, Global Server Load Balancing (GSLB) stands as a cornerstone in your toolkit.

Additional resources

- Choosing the best option to host across multiple locations or data centers

- GSLB direct-to-node: how it works, and when to use it for your object storage deployment

- Cloudflare GSLB

- Efficient IP - What is GSLB

- EDNS-CS

- What is F5 BIG-IP GSLB?

- What is Citrix NetScaler GSLB?

- Comparing Layer 4, Layer 7, and GSLB load balancing techniques

- Active/Active for scalable redundancy