Direct Server Return (DSR), which Loadbalancer.org calls Direct Return (DR), is my favorite way to load balance application servers because it’s simple, transparent and super fast.

However, Direct Return seems to have fallen out of fashion. In fact, most application delivery vendors seem to hate DSR mode. They often publish negative articles with titles such as "The Disadvantages of DSR (Direct Server Return)" that list far too many drawbacks and barely explain the benefits...like this white paper from Kemp Technologies.

Why do application delivery vendors put such a negative spin on Direct Return?

Well, personally, I think it's so that they can up-sell you to more expensive hardware or complicated functionality that you may not actually need.

So, let's look at the Kemp white paper in more detail and see whether its arguments make sense.

| KEMP'S WHITE PAPER CLAIM | MY THOUGHTS... |
|---|---|
| Backend servers have to crank up the amount of work that they must do, responding to health check requests with their own IP address and to content requests with the VIP assigned by the load balancer. | Really? Surely the number of health checks is the same? The app will often listen on all IPs anyway. And even if it doesn't, and you need to force it to listen on multiple addresses, or more likely all addresses (0.0.0.0), would the difference really be measurable? |
| Cookie insertion and port translation are not able to be implemented. | Fair comment. However, it's only a problem if you actually need them. And I would argue that cookies are far less reliable than source IP persistence. |
| ARP (Address Resolution Protocol) requests must be ignored by the backend servers. If not, the VIP traffic routing will be bypassed as the backend server establishes a direct two way connection with the client. | A fair warning, just in case you forget to make this minor configuration change, but is it really a drawback? |
| Application acceleration is not an option, as the load balancer plays no part in the handling of outbound traffic. | That makes no sense. You don't need application acceleration, because return throughput is maximized and scales almost without limit. What exactly is application acceleration anyway? Normally it just means SSL termination on the load balancer, i.e. increasing the load on your load balancer and decreasing the load on the cluster. That's simply not as scalable as doing it in the cluster. You are just trading one type of acceleration for another, which seems a fair swap in most typical deployments. |
| Protocol vulnerabilities are not protected. Slack enforcement of RFCs and incorrectly specified protocols are unable to be addressed and resolved. | You mean at the load balancer, by a WAF or similar, right? In some cases you may have a WAF (or even a cluster of them) upstream for such a requirement; in others you would address security concerns at the real servers, just as you would if running a single server. So it's not a massive issue. And what kind of crazy person would run a vulnerable server in the first place? |
| Caching needs to take place on the routers using WCCP. This means a more complex network, an additional potential point of failure and greater latency due to an additional network hop. | Only if you use WCCP, right? I don't... The second sentence implies that you WILL NEED a more complex network, rather than that you might if you use Cisco's WCCP technology. I haven't heard of anyone using WCCP in years. Surely you would use the load balancer to replace WCCP. |
| There is no way to handle SOAP/Error/Exception issues. The user receives classic error messages such as 404 and 500 without the load balancer having the chance to retry the request or even notify the administrator. | Do you really need this? While custom error messages are nice, you could always fix the problem at the real servers. I've never seen any customer demand for changing error messages on the load balancer. |
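To make the 0.0.0.0 point from the first row concrete, here's a minimal Python sketch of an application that binds to all addresses. Because it binds to 0.0.0.0, the same socket accepts health checks sent to the server's own IP and client traffic sent to the VIP (which, in DR mode, lives on the loopback adapter). `getsockname()` shows which local address a connection arrived on, and the peer address is the real client IP. The function names, the ephemeral port and the "hello" reply are illustrative assumptions, not part of any particular application.

```python
import socket
import threading


def make_listener(port: int = 0) -> socket.socket:
    """Bind to 0.0.0.0 so every local address (real IP, VIP on lo) is covered."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind(("0.0.0.0", port))  # port 0 = let the OS pick one for the demo
    listener.listen()
    return listener


def serve_once(listener: socket.socket) -> tuple:
    """Accept one connection and return (local address hit, client address)."""
    conn, (client_ip, _client_port) = listener.accept()
    with conn:
        local_ip, _local_port = conn.getsockname()
        # In DR mode local_ip would be the VIP for client traffic and the real
        # server IP for health checks; client_ip is the untouched source address.
        conn.sendall(f"hello {client_ip}, you reached {local_ip}\n".encode())
        return local_ip, client_ip


if __name__ == "__main__":
    listener = make_listener()
    port = listener.getsockname()[1]
    result = {}
    worker = threading.Thread(target=lambda: result.update(r=serve_once(listener)))
    worker.start()
    # Stand-in for a health check connecting to one of the server's addresses.
    with socket.create_connection(("127.0.0.1", port)) as client:
        print(client.recv(1024).decode().strip())
    worker.join()
    listener.close()
    print(result["r"])
```

One listening socket serves both kinds of request, so there is no extra per-request work on the backend.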

What are my opinions on the matter...?

Well, I started this blog by saying that DSR is my favorite way to load balance application servers, and I stand by that statement! It's brilliant, easy to implement and super fast (did I mention that yet?).

The benefits of DSR

Full source IP transparency

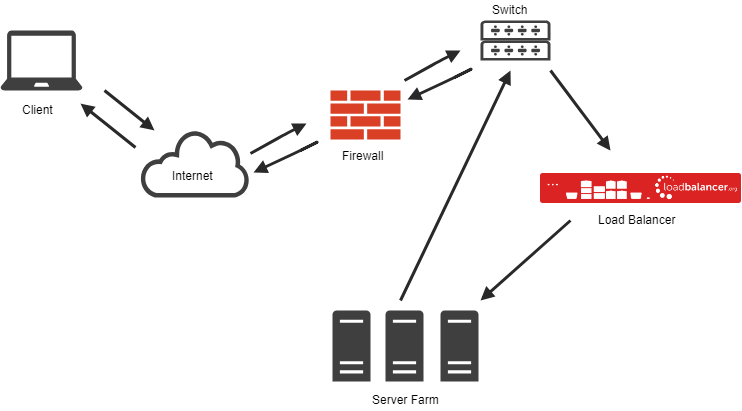

The servers see a connection directly from the client IP and reply to the client through the normal default gateway, bypassing the load balancer on the return path.

No infrastructure changes required

The load balancer sits on the same subnet as the backend servers (DR mode requires this, because only the MAC address is rewritten), keeping things as simple as possible.

Lightning fast

Only the destination MAC address of each packet is changed, and Server → Client traffic scales as you add more real servers, allowing multi-gigabit throughput while using only a 1G-equipped load balancer.
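The forwarding step described above can be sketched in a few lines of Python. This is a minimal illustration, assuming a plain Ethernet II frame and a simple round robin over two hypothetical real server MAC addresses; a real load balancer does this in the kernel, not in Python. The point is that the IP packet inside the frame (source = client IP, destination = VIP) is copied byte for byte, which is why the real server can answer the client directly.

```python
import itertools
import struct

# Ethernet II header: destination MAC, source MAC, EtherType (14 bytes).
ETH_HEADER = struct.Struct("!6s6sH")


def mac_bytes(mac: str) -> bytes:
    """Convert 'aa:bb:cc:dd:ee:ff' into 6 raw bytes."""
    return bytes.fromhex(mac.replace(":", ""))


def dr_forward(frame: bytes, real_server_mac: str) -> bytes:
    """Return a copy of the frame with only the destination MAC replaced."""
    _old_dst, src, ethertype = ETH_HEADER.unpack_from(frame)
    new_header = ETH_HEADER.pack(mac_bytes(real_server_mac), src, ethertype)
    # Everything after the Ethernet header (IP header, TCP header, payload)
    # is left untouched: the client IP and the VIP survive unchanged.
    return new_header + frame[ETH_HEADER.size:]


# Hypothetical real server MACs; round robin stands in for the real scheduler.
REAL_SERVERS = itertools.cycle(["02:00:00:00:00:0a", "02:00:00:00:00:0b"])

if __name__ == "__main__":
    lb_mac = mac_bytes("02:00:00:00:00:01")
    router_mac = mac_bytes("02:00:00:00:00:fe")
    ip_packet = b"\x45" + bytes(19) + b"payload"  # placeholder, not a valid IP header
    frame = ETH_HEADER.pack(lb_mac, router_mac, 0x0800) + ip_packet
    for _ in range(2):
        out = dr_forward(frame, next(REAL_SERVERS))
        print(out[:6].hex(":"), out[ETH_HEADER.size:] == ip_packet)
```

Since the load balancer touches six bytes per inbound frame and never sees the replies, its work is independent of how much data the servers send back.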

Cost effective

DSR (or any Layer 4 method) doesn't demand much in the way of load balancer hardware. You can achieve incredible results with a load balancer costing $4,000-$10,000, rather than potentially spending $20,000-$50,000 (or more) on one powerful enough to run Layer 7, SSL offloading and WAF in earnest.

Broad application compatibility

Not only is it a widely supported technology, it can actually help some applications and protocols that need to open new connections directly back to the client, such as RTSP.

COOL FACT: Did you know that DR was used to serve HTTP for the Atlanta and Sydney Olympic Games, and for the chess match between Kasparov and Deep Blue?

So you're probably left scratching your head, thinking you would be nuts not to use DSR, right? Why is nobody else telling us how great it is?

In my opinion, it's because every customer that plans for direct server return buys the cheap, cost-effective load balancer model with no bolt-ons or extras, which means these customers produce less revenue. It's much better for the vendor to suggest using as many features of a modern load balancer as possible, and then upsell the customer to more powerful, expensive hardware and support contracts to use them all.

So, are there any real drawbacks to be concerned about…?

Well...there are a couple of drawbacks...

- You won't have access to Layer 7 processing at the load balancer.

- Persistence will be restricted to source IP or destination IP methods, so no cookie-based persistence.

- SSL offloading and WAFs at the load balancer are not an option, because they need to see both inbound and outbound traffic; if you require them, you would need to run them on separate hardware.

- Some operating systems or closed appliances may not provide a way to resolve the ARP problem or to install a loopback adapter, but most do!
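Source IP persistence, the main option left in DR mode, is easy to reason about. Below is a minimal Python sketch, assuming an affinity table keyed on client IP with an idle timeout, and round robin for new or expired clients. The class name, the 300-second default and the scheduling choice are illustrative assumptions, not how any particular load balancer implements it.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class SourceIPPersistence:
    """Pin each client IP to one real server until it has been idle for `timeout` seconds."""
    servers: List[str]
    timeout: float = 300.0  # assumed idle timeout, in seconds
    _table: Dict[str, Tuple[str, float]] = field(default_factory=dict, init=False)
    _next: int = field(default=0, init=False)

    def pick(self, client_ip: str, now: Optional[float] = None) -> str:
        now = time.monotonic() if now is None else now
        entry = self._table.get(client_ip)
        if entry is not None and now - entry[1] < self.timeout:
            server = entry[0]  # existing affinity is still valid
        else:
            # New or expired client: hand out the next server in rotation.
            server = self.servers[self._next % len(self.servers)]
            self._next += 1
        self._table[client_ip] = (server, now)  # refresh the last-seen time
        return server


if __name__ == "__main__":
    p = SourceIPPersistence(["rip1", "rip2"], timeout=300)
    print(p.pick("198.51.100.7", now=0))    # rip1
    print(p.pick("203.0.113.9", now=1))     # rip2
    print(p.pick("198.51.100.7", now=100))  # rip1 again, still within the timeout
```

Note that every client behind one shared NAT address presents the same source IP, so all of them will stick to the same real server.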

Should you use DR where possible?

Yes, most definitely!

Its cost of ownership is very attractive, and configuration is simple: you don't need to adjust routing or give up source IP transparency.

Leave a comment below if you have any questions or check out some more of our DSR blog posts here.

2020 update

Want to know more about how Layer 4 DR mode works, and why it's awesome? Check out this video: