My personal preference for enhancing application delivery is Layer 4 load balancing, but there's more than one architectural approach available to you. When engineers think of Layer 4 load balancing, they often think of Layer 4 DR mode, but there are other Layer 4 modes worth considering, depending on your use case!

This document provides a comprehensive guide to Layer 4 load balancing, including understanding, using, and ultimately configuring Layer 4 DR, NAT, and SNAT. And good ol’ TUN gets a mention too!

Table of contents

- What is Layer 4 load balancing

- Layer 4 load balancing protocols

- Pros and cons of each Layer 4 load balancing mode

- Why Layer 4 DR is not always the answer

- How Layer 4 load balancing works

- Benefits of Layer 4 load balancing

- Common Layer 4 use cases

- How to configure Layer 4 load balancing

- Pairing Layer 4 load balancing with Layer 7


What is Layer 4 load balancing

Layer 4 load balancing is a way of distributing application or network traffic across multiple servers. The end result is high availability, improved network performance, and more scalable applications and services.

But wait, I hear you ask, isn’t that also what Layer 7 load balancing does? Well, yes, but the way it does it is very different.

Layer 4 load balancing operates at the Transport Layer (Layer 4) of the OSI model, primarily using information from the IP header and the TCP (Transmission Control Protocol) or UDP (User Datagram Protocol) header. This includes the source and destination IP addresses and ports, but not the actual content (payload) of the data packets.

Layer 7 load balancing, in contrast, inspects the actual content being delivered (such as HTTP headers, cookies, and URLs) and responds accordingly, providing what is effectively smart routing and rerouting.

Digging deeper still, there are three main Layer 4 modes available to Network Engineers and System Admins opting for Layer 4 load balancing:

- Layer 4 DR mode

- Layer 4 NAT mode

- Layer 4 SNAT mode

I’ll explain each one in turn, and also briefly touch on Layer 4 TUN.

Layer 4 load balancing protocols

Layer 4 load balancing operates at the transport layer of the OSI model, primarily using the following protocols:

- TCP (Transmission Control Protocol) - TCP is a connection-oriented protocol, meaning that before data can be exchanged, a connection must be established between the sender and receiver. This involves a process called the three-way handshake.

- UDP (User Datagram Protocol) - Unlike TCP, UDP is a connectionless and unreliable protocol. This means it sends data without first setting up a formal connection or guaranteeing that packets will be delivered, arrive in order, or be error-free. There's no handshake needed to establish a connection.

For both protocols, a Layer 4 load balancer makes its decisions without examining the packet's content.
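To see what "without examining the packet's content" means in practice, here is a minimal Python sketch of the only fields a Layer 4 decision needs from an IPv4 TCP or UDP packet. It assumes an IPv4 header followed directly by a TCP or UDP header; `extract_five_tuple` and `build_ipv4_tcp` are hypothetical helpers written for illustration, and the packet is hand-built with documentation addresses rather than captured from a real network.

```python
import socket
import struct
from typing import NamedTuple


class FiveTuple(NamedTuple):
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: int  # IANA protocol number: 6 = TCP, 17 = UDP


def extract_five_tuple(packet: bytes) -> FiveTuple:
    """Read only the IPv4 header and the first 4 bytes of the TCP/UDP header.

    Everything after the ports (sequence numbers, payload, HTTP data) is ignored,
    which is why a Layer 4 decision is so cheap.
    """
    ihl = (packet[0] & 0x0F) * 4  # IPv4 header length in bytes
    protocol = packet[9]
    src_ip = socket.inet_ntoa(packet[12:16])
    dst_ip = socket.inet_ntoa(packet[16:20])
    # TCP and UDP both begin with a 16-bit source port and a 16-bit destination port
    src_port, dst_port = struct.unpack("!HH", packet[ihl:ihl + 4])
    return FiveTuple(src_ip, dst_ip, src_port, dst_port, protocol)


def build_ipv4_tcp(src: str, dst: str, sport: int, dport: int, payload: bytes = b"") -> bytes:
    """Hand-build a minimal IPv4 + TCP packet (checksums left at 0 for brevity)."""
    ip_header = struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, 40 + len(payload), 0, 0, 64, 6, 0,  # 6 = TCP
        socket.inet_aton(src), socket.inet_aton(dst),
    )
    tcp_header = struct.pack("!HHIIBBHHH", sport, dport, 0, 0, 5 << 4, 0x02, 65535, 0, 0)
    return ip_header + tcp_header + payload


pkt = build_ipv4_tcp("203.0.113.10", "192.0.2.100", 51515, 443, b"GET / HTTP/1.1\r\n")
print(extract_five_tuple(pkt))
```

The HTTP request line in the payload plays no part in the result; a Layer 7 load balancer would have to read past the headers to see it.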

Pros and cons of each Layer 4 load balancing mode

Layer 4 DR (Direct Routing) load balancing

Layer 4 DR load balancing (often referred to as Direct Server Return) is probably the method you’re most familiar with. The ‘DR’ in ‘Layer 4 DR’ stands for direct routing, and (in my humble opinion) blows the other methods out of the water when it comes to speed and scalability.

Layer 4 DR forwards request packets directly to the selected servers by modifying only the destination MAC address of each incoming request. In DR mode, the servers then send their responses directly back to the clients, so reply traffic bypasses the load balancer, which maximizes throughput.
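The DR behaviour described above can be modelled in a few lines of Python. This is a simplified sketch, not how any particular load balancer is implemented: `Frame`, `dr_forward` and `real_server_reply` are hypothetical names, the MAC and IP addresses come from the documentation ranges, and only the addressing fields are modelled.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Frame:
    dst_mac: str
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int


def dr_forward(frame: Frame, real_server_mac: str) -> Frame:
    """DR mode: the load balancer rewrites only the destination MAC address.

    The destination IP is still the VIP and the destination port is unchanged,
    which is why port translation is impossible in this mode.
    """
    return replace(frame, dst_mac=real_server_mac)


def real_server_reply(request: Frame, gateway_mac: str) -> Frame:
    """The Real Server also owns the VIP, so it replies *from* the VIP straight to
    the client via its default gateway; the load balancer never sees the reply."""
    return Frame(
        dst_mac=gateway_mac,
        src_ip=request.dst_ip,
        dst_ip=request.src_ip,
        src_port=request.dst_port,
        dst_port=request.src_port,
    )


VIP, CLIENT = "192.0.2.100", "203.0.113.10"
incoming = Frame(dst_mac="00:00:5e:00:53:01", src_ip=CLIENT, dst_ip=VIP, src_port=51515, dst_port=80)
forwarded = dr_forward(incoming, real_server_mac="00:00:5e:00:53:11")
reply = real_server_reply(forwarded, gateway_mac="00:00:5e:00:53:fe")
```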

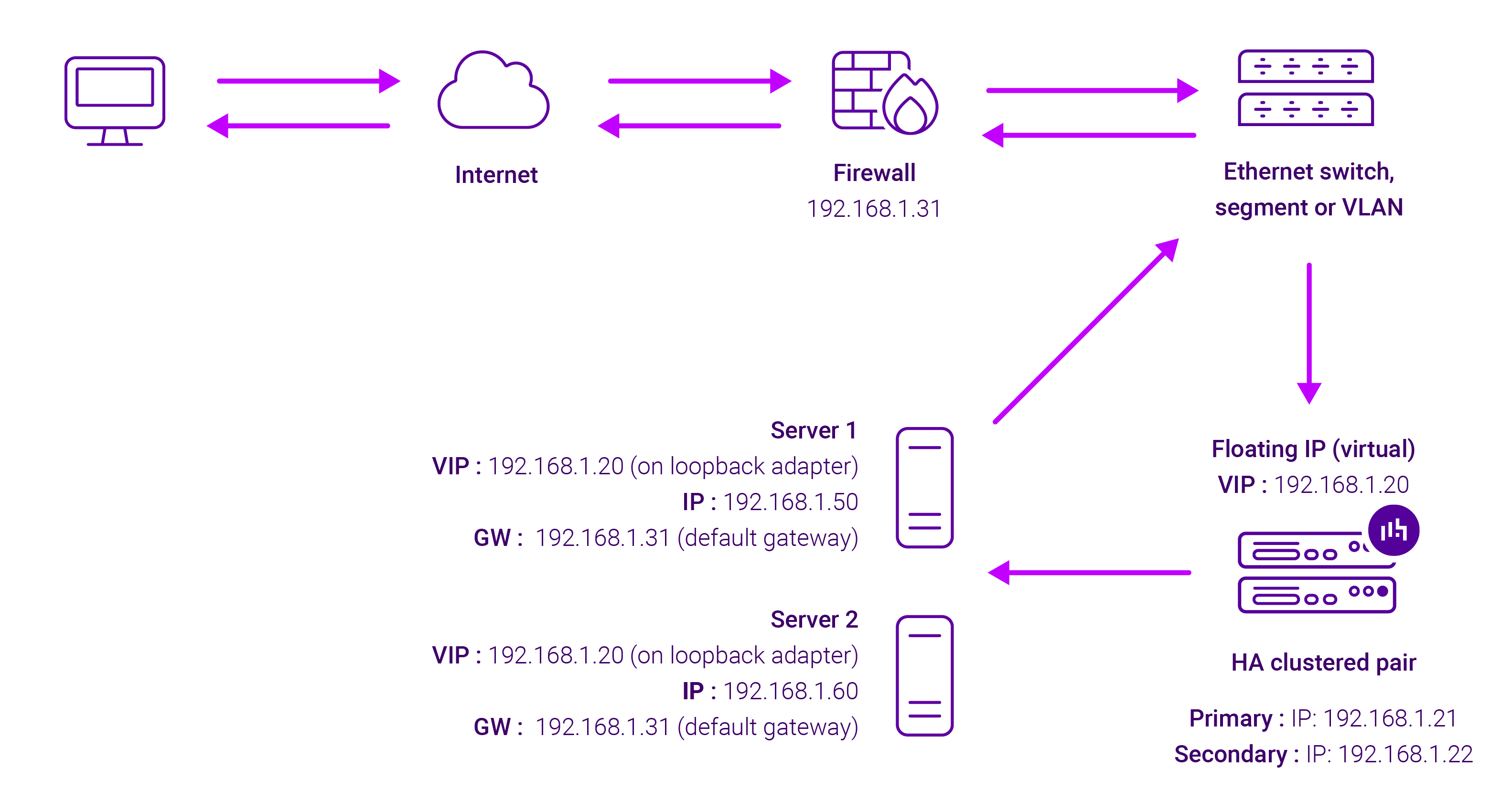

Here’s a concept diagram to illustrate how it works:

Pros of Layer 4 DR mode

Things I get excited about:

- Achieve rapid, local distribution of server traffic

- Works with your existing infrastructure, no major overhaul required

- Operates independently of your default gateway

- Offers full IP transparency

- The load balancer can reside on the same subnet as your existing servers

- This system only alters the destination MAC address of packets, enabling the use of multiple return gateways for genuine multi-gigabit throughput

Cons of Layer 4 DR mode

Things I’m not such a fan of:

- For the load balancer to function correctly, your backend servers must ignore ARP requests for the virtual IP, preventing them from diverting traffic away from the load balancer

- You can’t utilize port translation or cookie insertion with this setup

- There are scenarios where Direct Routing can't be implemented due to unchangeable application or operating system configurations

- Your backend servers can't be separated by routers from the load balancer

- Some switches have spoofing protection that's incompatible with Direct Routing mode, so you'll need to disable it

For more on Layer 4 DR mode, check out this fantastic blog written by my colleague Andrew Howe: 15 years later, we still love DSR: Why Layer 4 DR mode rocks.

Layer 4 NAT (Network Address Translation) load balancing

Layer 4 NAT mode delivers strong performance, although it doesn't quite match the speed of Layer 4 DR mode. This is because, unlike in DR mode, server responses have to return to the client through the load balancer.

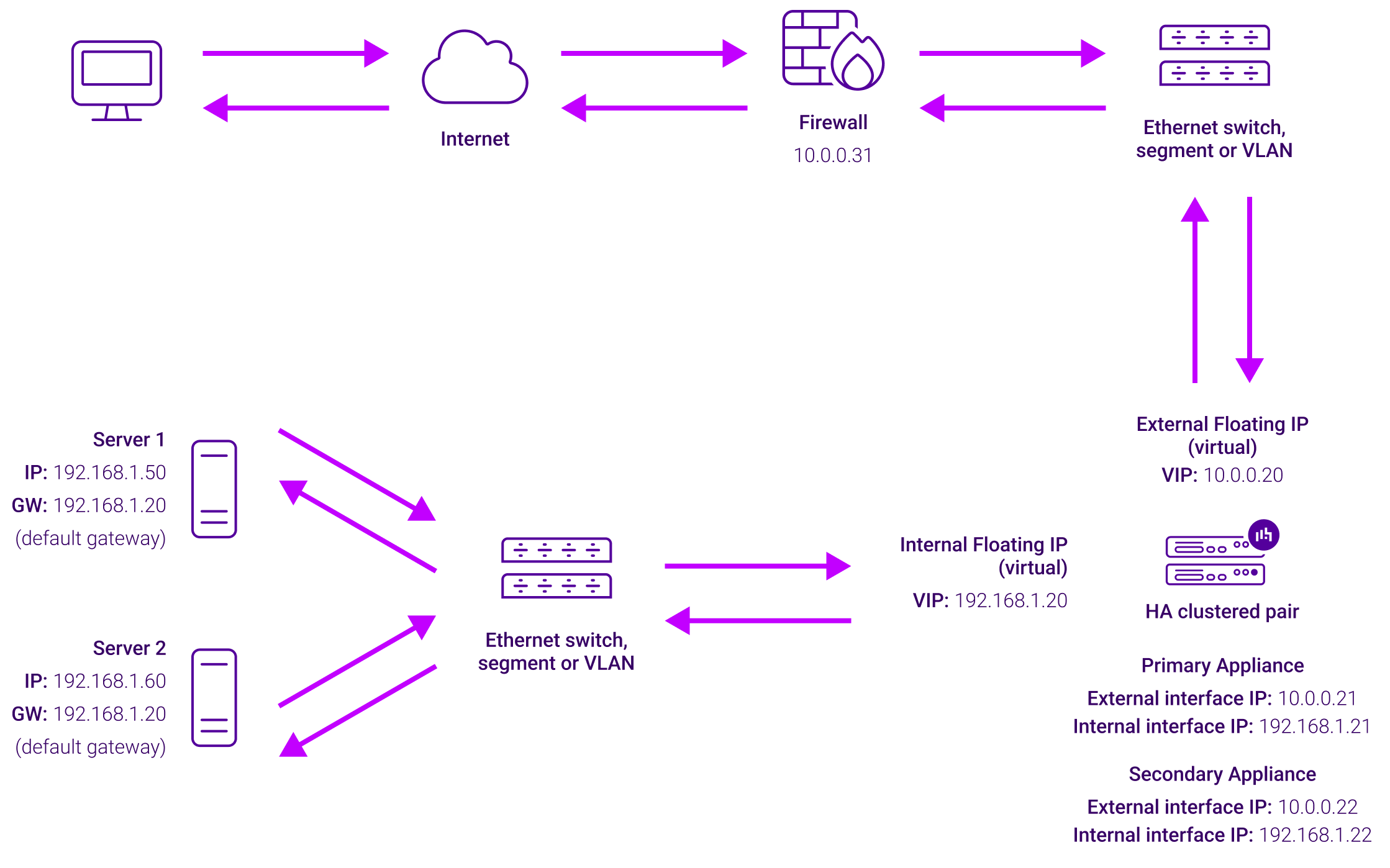

Here’s an illustration:

Pros of Layer 4 NAT mode

Things about Layer 4 NAT that make me happy:

- This method works well when the host operating system can't be altered to resolve the ARP issue

- It separates traffic, offering a degree of server protection

- It works with any backend server (also known as a Real Server); you simply reconfigure its default gateway to point at the load balancer

- It offers relatively high performance, operating much like a router, which makes it faster than a typical firewall

- It allows translation and reporting of traffic in both directions (inbound and outbound)

- It's transparent to the real servers, meaning server logs will display the correct client IP addresses

- You can get transparent load balancing for your application quickly and flexibly using one-arm NAT mode

- It works well in the AWS cloud, where DR mode isn't supported

Cons of Layer 4 NAT mode

Things I’m not keen on:

- Protected servers can become inaccessible, requiring you to open ports in the load balancer's firewall to restore visibility

- The reporting is very basic and difficult to understand

- Real servers need to be secure, properly configured, and scalable

- Policy-Based Routing (PBR) rules might be necessary

- One-arm NAT can disrupt Windows server routing tables

- It’s not as easy to configure as Layer 4 SNAT or Layer 7

Layer 4 SNAT (Source Network Address Translation) load balancing

Layer 4 SNAT mode offers high performance, though it's not as quick as Layer 4 NAT mode or Layer 4 DR mode. With Layer 4 SNAT mode load balancing, the load balancer translates both the source and the destination address of each request, so all traffic, both inbound and outbound, flows through it.

Because the load balancer rewrites the request's source IP address, the backend servers log the load balancer's IP as the client's source instead of the original client's IP, so SNAT mode is not transparent.

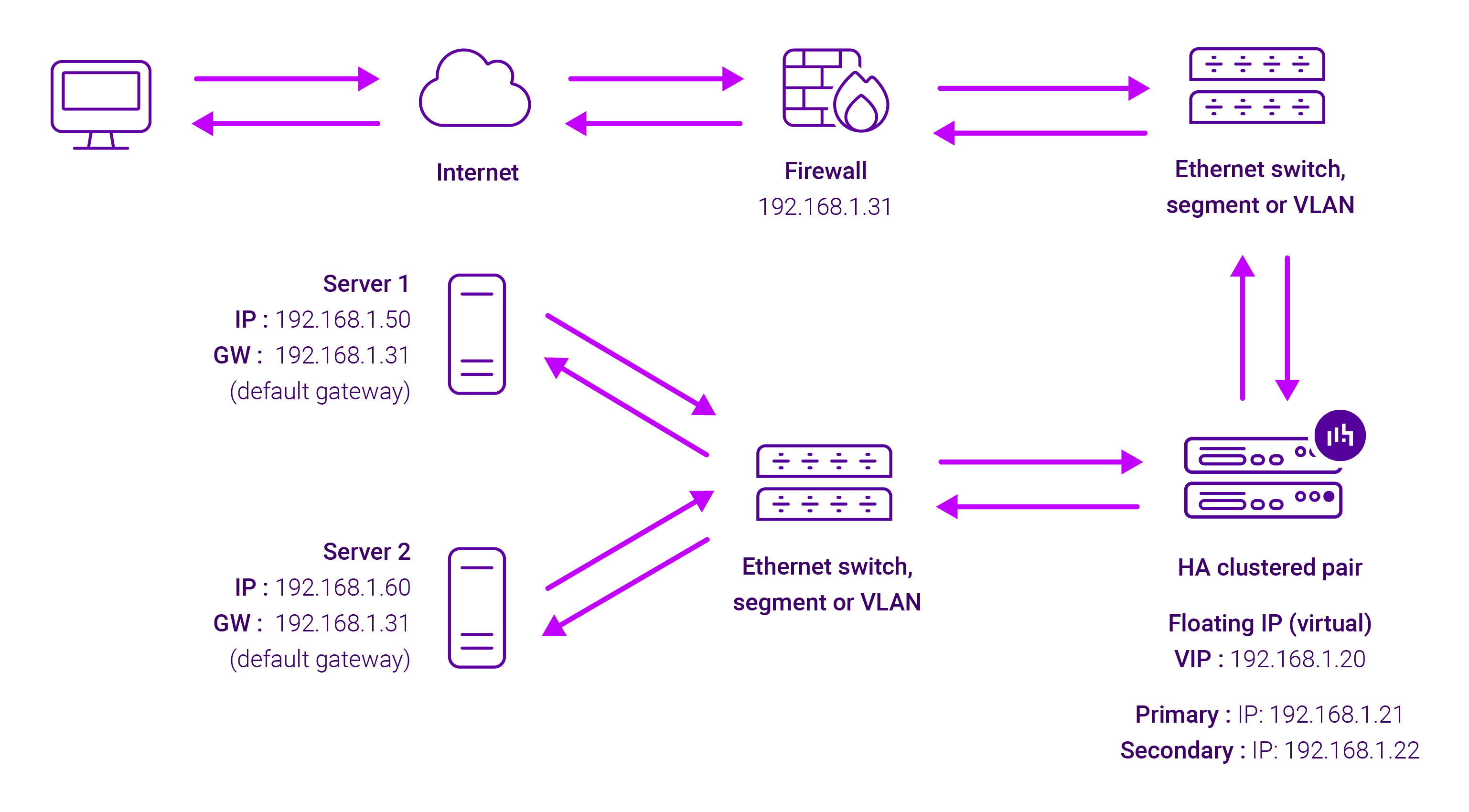

Here’s how it looks:

Pros of Layer 4 SNAT mode

Things I like about Layer 4 SNAT:

- The load-balanced servers require no modifications whatsoever

- It's useful for load balancing UDP traffic when you don't need the source IP address to be transparent

- It supports port translation, e.g. VIP:80 → RIP:8080

Cons of Layer 4 SNAT mode

Things I don’t like about Layer 4 SNAT:

- Since TPROXY can't be used, servers won't see the client's IP address in their logs

- It's not as fast as Layer 4 NAT mode or Layer 4 DR mode

- You cannot use the same Real IP (RIP):Port combination for both Layer 4 SNAT mode VIPs and Layer 7 Proxy mode VIPs, because the required firewall rules conflict

Layer 4 TUN (Tunnelling) load balancing

Layer 4 TUN load balancing is rarely used these days, but I’ll mention it here for completeness. I must confess though, I’ve personally only used it a handful of times.

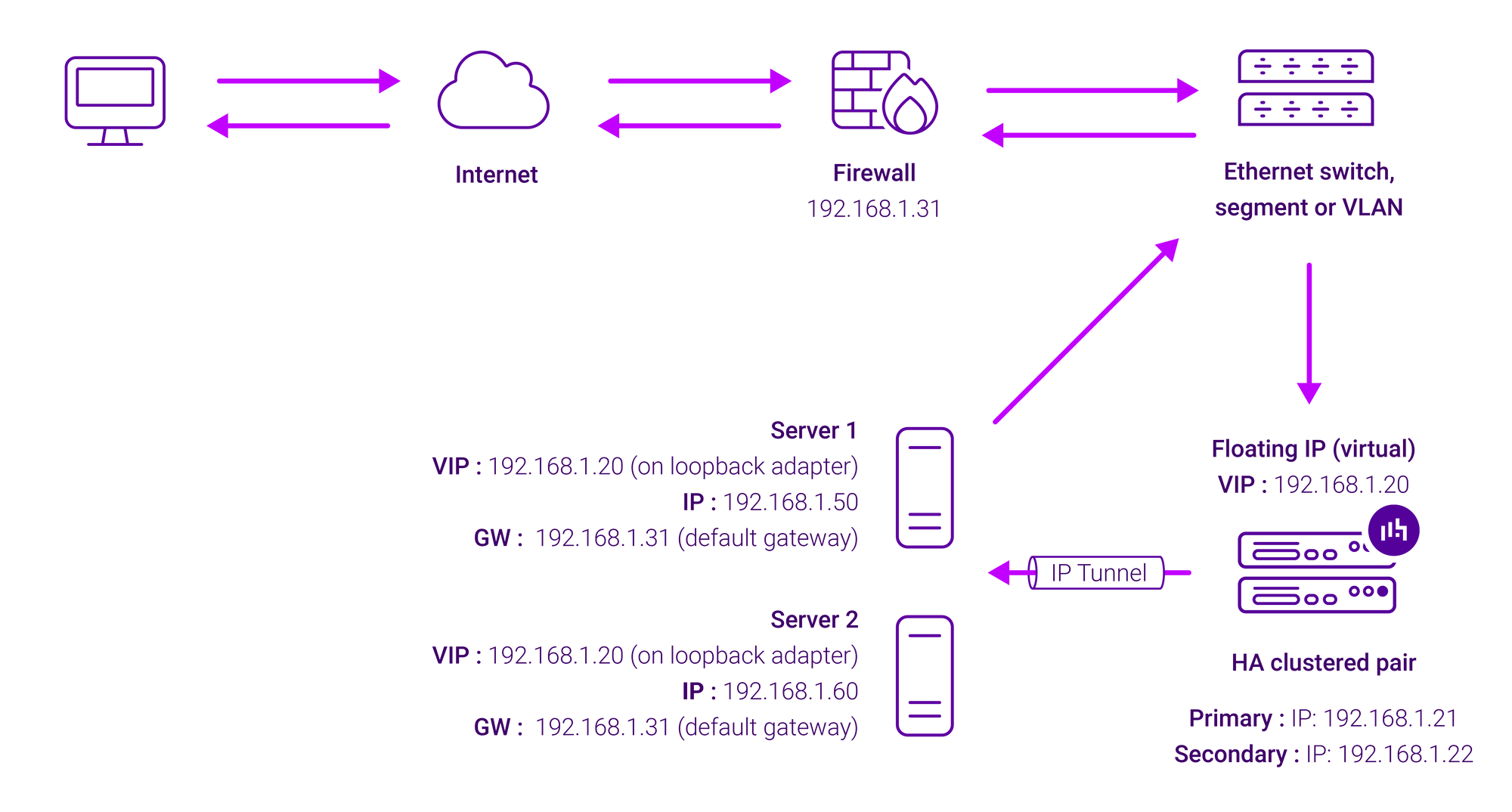

Layer 4 TUN operates similarly to Direct Routing (DR) mode, with the key difference being that traffic can be routed between the load balancer and the server. The load balancer encapsulates the client's request within an IP tunnel directed towards the server. The server receives the client's request from the load balancer, processes it, and then sends the response directly back to the client, bypassing the load balancer on the way out.

Tunnelling has somewhat limited use as it requires an IP tunnel between the load balancer and the Real Server. Because the VIP is the target address, many routers will drop the packet assuming that it has been spoofed. However, it can be useful for private networks with Real Servers on multiple subnets or across router hops.

Here’s how it works:

Pros of Layer 4 TUN mode

Good things about Layer 4 TUN:

- It's useful for creating virtual network interfaces for VPNs and other virtual networking scenarios

- This method bridges the gap when a router hop is introduced

- It can deliver transparent load balancing across the WAN

- IP encapsulation carries the client's original packet intact through the tunnel (although IP-in-IP does not encrypt traffic by itself)

Cons of Layer 4 TUN mode

Not so great things about Layer 4 TUN:

- You need full control over each router hop to ensure packets aren't rejected as spoofed ("martian") packets

- It's difficult to configure and manage because of its complexity

- Requires specific kernel module configurations and in practice only works on Linux/UNIX servers

- Needs manual routing table entries

- The Maximum Transmission Unit (MTU) needs to be carefully handled to avoid fragmentation issues
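The tunnelling and MTU points above can be illustrated with a short Python sketch. It assumes IPv4-in-IPv4 encapsulation (IP protocol number 4), a standard 1500-byte Ethernet MTU, and IP and TCP headers without options; `ipip_encapsulate` and `tunnel_limits` are hypothetical helpers, not part of any real tunnelling API.

```python
import socket
import struct

ETHERNET_MTU = 1500  # assumed link MTU
IPV4_HEADER = 20     # IPv4 header without options
TCP_HEADER = 20      # TCP header without options


def ipip_encapsulate(inner: bytes, lb_ip: str, rip: str) -> bytes:
    """Wrap a client->VIP IPv4 packet in an outer LB->RIP IPv4 header (protocol 4 = IP-in-IP).

    The inner packet is carried unchanged. The outer header checksum is left at 0 here;
    a real tunnel endpoint (such as the kernel) computes it.
    """
    outer = struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, IPV4_HEADER + len(inner), 0, 0, 64, 4, 0,
        socket.inet_aton(lb_ip), socket.inet_aton(rip),
    )
    return outer + inner


def tunnel_limits(link_mtu: int = ETHERNET_MTU) -> tuple[int, int]:
    """Largest inner packet and TCP MSS that fit without fragmenting the outer packet."""
    inner_mtu = link_mtu - IPV4_HEADER  # the outer header uses 20 bytes
    inner_mss = inner_mtu - IPV4_HEADER - TCP_HEADER
    return inner_mtu, inner_mss


full_size_packet = bytes(ETHERNET_MTU)  # stand-in for a 1500-byte client packet
tunnelled = ipip_encapsulate(full_size_packet, "10.0.0.1", "10.20.0.11")
print(len(tunnelled), tunnel_limits())  # 1520 (1480, 1440)
```

Wrapping a full-size 1500-byte client packet produces a 1520-byte packet, which no longer fits the link. Unless the MTU or TCP MSS on the tunnelled path is reduced (to 1480 and 1440 bytes respectively in this example), such packets have to be fragmented, or are dropped if the Don't Fragment bit is set.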

Why do we not use Layer 4 TUN any more?

Historically, Layer 4 TUN load balancing was a popular method, particularly when hardware wasn't as powerful and applications were less complex.

These days, Layer 7 load balancing and other superior Layer 4 load balancing methods have become more prominent for web applications, although Layer 4 TUN potentially still has a role to play in load balancing performance-critical or non-HTTP/S traffic, or within multi-tiered load balancing strategies.

The bottom line is that Layer 4 TUN load balancing is hard to justify over Layer 4 DR (higher performance), Layer 4 NAT (simpler to set up), or Layer 7 load balancing (offers intelligent routing).

Why Layer 4 DR is NOT always the answer

Layer 4 DR shouldn’t be your default

Yes, Layer 4 DR certainly excels in the speed department: by bypassing the load balancer for return traffic, it minimizes latency and boosts overall performance. BUT (and it’s a big but…) this need for speed comes with some trade-offs, namely:

- Limited visibility: Layer 4 DR only retains very basic information about clients (i.e. their IP address), which makes it poor at managing persistence. Because IPv4 addresses are scarce, many clients will be coming from behind NAT, and some will be using a VPN. In both scenarios, many clients present the same IP address to the load balancer, so source IP persistence can pin all of them to the same server.

- No application awareness: Layer 4 DR only sees packets and misses out on application awareness. This means it can't do things like content switching, header rewrites, or Access Control Lists. Imagine having a website that shows different things based on the client's location – DR won’t help you here.
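The persistence problem in the "Limited visibility" point is easy to demonstrate. The sketch below uses a hash of the client IP as a simplified stand-in for source IP persistence (real load balancers usually keep a persistence table with a timeout instead); the server names, addresses and user counts are made up for illustration, and Python 3.9+ is assumed.

```python
import hashlib
from collections import Counter

REAL_SERVERS = ["rip1", "rip2", "rip3"]


def persistent_server(client_ip: str, servers: list[str] = REAL_SERVERS) -> str:
    """Source IP persistence, simplified: hash the client IP so the same IP always
    lands on the same Real Server."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:4], "big") % len(servers)]


# 300 users in one office share a single public IP behind NAT,
# plus 3 users connecting from their own addresses.
clients = ["198.51.100.7"] * 300 + ["203.0.113.21", "203.0.113.22", "203.0.113.23"]
load = Counter(persistent_server(ip) for ip in clients)
print(load)
```

Whichever server the shared office IP maps to ends up with at least 300 of the 303 users, however many Real Servers you add.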

Tricky to set up

Even though Layer 4 DR is fast, getting it going can also be more complicated than it seems at first glance:

- Server tweaks: Layer 4 DR often means changing how your servers are set up to handle the load balancer's virtual IP address. This can mess with existing programs or operating systems, requiring extra work and testing to make sure everything runs smoothly.

- ARP challenges: DR throws a curveball with something called Address Resolution Protocol (ARP). Your servers need to be told to ignore ARP requests for the virtual IP address. This can be tricky, and any mistakes could cause unexpected problems or even stop traffic flow altogether. There's a reason why we often refer our customers to this chapter of the Manual.

- Limited growth potential: DR typically works best in simple setups where all traffic goes through one connection. This might not work well for complex networks with multiple connections or servers in different locations.

- Firewalls: Modern firewalls often see DR as a security flaw. Replies sourced from the VIP look like an IP spoofing attack, and traffic that takes a different path in each direction makes inspection more complicated.

But if not Layer 4 DR, then what?

Your other throughput allies!

There are lots of other methods of load balancing, so give them a go too!

- Layer 4 NAT: This offers a good balance between speed and ease of setup. NAT is still a high-performance mode, although the return traffic flows via the load balancer. NAT also provides IP transparency, which may be important for some applications. The Real Servers' default gateway must be an IP address on the load balancer to ensure the packets are routed correctly.

- Layer 4 SNAT: Simple to configure, still good performance and supports UDP. Sadly no IP transparency.

Yes, Layer 4 DR load balancing excels in certain scenarios, such as busy applications like video streaming that need lightning-fast speed. However, in many everyday situations, its limited features, complicated setup, and security risks can outweigh its performance advantages. So it's crucial to assess your application's requirements and consider the alternatives.

How Layer 4 load balancing works

Each Layer 4 mode works differently, so in order to explain how Layer 4 load balancing works in practice, I’ll break down each one. I’ve left Layer 4 TUN out for now, but if you’re interested, drop me a message in the comments below, or check out these great resources:

- Load balancing - Linux Virtual Server (LVS) and its Forwarding Modes

- Virtual Server via IP Tunnelling

How Layer 4 DR mode works

DR mode works by changing the destination MAC address of the incoming packet on the fly to match the selected Real Server, which is very fast. When the packet reaches the Real Server, DR mode expects the Real Server to own the Virtual Service's IP address (VIP). This means you need to ensure that the Real Server (and the load balanced application) respond to both the Real Server’s own IP address and the VIP. The Real Servers should not respond to ARP requests for the VIP; only the load balancer should do this. Configuring the Real Servers in this way is referred to as Solving the ARP Problem. On average, DR mode is 8 times quicker than NAT for HTTP, 50 times quicker for Terminal Services and much, much faster for streaming media or FTP.

The load balancer must have an interface in the same subnet as the Real Servers to ensure the Layer 2 connectivity required for DR mode to work. The VIP can be brought up on the same subnet as the Real Servers, or on a different subnet provided that the load balancer has an interface in that subnet. Note that port translation is not possible with DR mode, e.g. VIP:80 → RIP:8080 is not supported, and that DR mode is transparent, i.e. the Real Server will see the source IP address of the client.
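The DR constraints above can be expressed as a simple pre-flight check. `VirtualService` and `dr_mode_problems` are hypothetical names for illustration (not a WebUI feature), the sketch only checks the two rules stated here (no port translation, and a load balancer interface on the subnet of the VIP and of every Real Server), and Python 3.9+ is assumed.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class VirtualService:
    vip: str
    vip_port: int
    real_servers: list[tuple[str, int]] = field(default_factory=list)  # (RIP, port)


def dr_mode_problems(svc: VirtualService, lb_interfaces: list[str]) -> list[str]:
    """List the reasons a service could not work in DR mode.

    lb_interfaces holds the load balancer's addresses in CIDR form, e.g. "192.168.1.20/24".
    """
    subnets = [ipaddress.ip_interface(addr).network for addr in lb_interfaces]

    def on_lb_subnet(ip: str) -> bool:
        return any(ipaddress.ip_address(ip) in net for net in subnets)

    problems = []
    if not on_lb_subnet(svc.vip):
        problems.append(f"VIP {svc.vip}: no load balancer interface in this subnet")
    for rip, port in svc.real_servers:
        if port != svc.vip_port:
            # Only the MAC address is rewritten, so the server sees the port the client used
            problems.append(f"{rip}: port translation {svc.vip_port}->{port} is not possible")
        if not on_lb_subnet(rip):
            # DR needs Layer 2 adjacency; a router hop in between breaks it
            problems.append(f"{rip}: not on a subnet the load balancer is attached to")
    return problems


good = VirtualService("192.168.1.100", 80, [("192.168.1.11", 80), ("192.168.1.12", 80)])
bad = VirtualService("192.168.1.100", 80, [("192.168.1.11", 80), ("10.0.0.5", 8080)])
print(dr_mode_problems(good, ["192.168.1.20/24"]))  # []
print(dr_mode_problems(bad, ["192.168.1.20/24"]))
```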

How Layer 4 NAT mode works

The load balancer translates all requests from the Virtual Service to the Real Servers. NAT mode can be deployed in a two-arm (using 2 interfaces) or one-arm (using 1 interface) configuration. If you want Real Servers to be accessible on their own IP address for non-load balanced services, e.g. RDP, you will need to set up individual SNAT and DNAT firewall script rules for each Real Server, or add additional VIPs for this. Port translation is possible with Layer 4 NAT mode, e.g. VIP:80 → RIP:8080 is supported. NAT mode is transparent, i.e. the Real Servers will see the source IP address of the client.
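Here is a minimal Python model of the NAT translation described above, including port translation from VIP:80 to RIP:8080. It is a sketch of the idea rather than of the kernel's actual connection tracking; `Packet` and `Layer4Nat` are hypothetical names, the addresses are examples, and Python 3.9+ is assumed.

```python
from dataclasses import dataclass

Address = tuple[str, int]  # (IP, port)


@dataclass(frozen=True)
class Packet:
    src: Address
    dst: Address


class Layer4Nat:
    """Minimal Layer 4 NAT sketch: destination NAT on the way in, reversed on the way out.

    The client's source address is never rewritten, which is what makes NAT mode
    transparent. The reverse step only happens if the reply actually passes back
    through the load balancer, i.e. if the load balancer is the Real Server's gateway.
    """

    def __init__(self) -> None:
        self.flows: dict[tuple[Address, Address], Address] = {}  # (client, RIP) -> VIP

    def inbound(self, pkt: Packet, rip: Address) -> Packet:
        self.flows[(pkt.src, rip)] = pkt.dst
        return Packet(src=pkt.src, dst=rip)

    def outbound(self, reply: Packet) -> Packet:
        vip = self.flows[(reply.dst, reply.src)]
        return Packet(src=vip, dst=reply.dst)


nat = Layer4Nat()
client, vip, rip = ("203.0.113.10", 51515), ("192.0.2.100", 80), ("10.0.0.11", 8080)
to_server = nat.inbound(Packet(src=client, dst=vip), rip)  # VIP:80 -> RIP:8080
to_client = nat.outbound(Packet(src=rip, dst=client))      # RIP:8080 -> VIP:80
```

Because the client address is never rewritten, the server sees the real client IP. The flip side is that the reply is addressed to the client, so it only gets translated back if the Real Server's gateway routes it through the load balancer.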

How Layer 4 SNAT mode works

The load balancer translates all requests from the external Virtual Service to the internal Real Servers in the same way as NAT mode.

Layer 4 SNAT mode is not transparent: an iptables SNAT rule translates the source IP address to that of the load balancer rather than the original client IP address. Layer 4 SNAT mode can be deployed using either a one-arm or two-arm configuration. For two-arm deployments, eth0 is normally used for the internal network and eth1 for the external network, although this is not mandatory.

If the Real Servers require Internet access, enable Autonat using the WebUI option Cluster Configuration > Layer 4 - Advanced Configuration, and select the external interface.

Layer 4 SNAT requires no mode-specific configuration changes to the load balanced Real Servers. Port translation is possible with Layer 4 SNAT mode, e.g. VIP:80 → RIP:8080 is supported. You should not use the same RIP:PORT combination for layer 4 SNAT mode VIPs and layer 7 Proxy mode VIPs because the required firewall rules conflict.
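For comparison, the same kind of model adapted to SNAT rewrites the source as well as the destination. In the setup described above this is done with an iptables SNAT rule; the Python below only models the resulting address changes. `Packet` and `Layer4Snat` are hypothetical names, the sequential port allocation is deliberately naive (no reuse or timeouts), and Python 3.9+ is assumed.

```python
import itertools
from dataclasses import dataclass

Address = tuple[str, int]  # (IP, port)


@dataclass(frozen=True)
class Packet:
    src: Address
    dst: Address


class Layer4Snat:
    """Minimal Layer 4 SNAT sketch: both the source and destination are rewritten.

    The Real Server sees the load balancer's IP as the client, so it replies to the
    load balancer whatever its default gateway is, but the original client IP is lost.
    """

    def __init__(self, lb_ip: str, first_port: int = 40000) -> None:
        self.lb_ip = lb_ip
        self._ports = itertools.count(first_port)
        self._flows: dict[int, tuple[Address, Address]] = {}  # LB port -> (client, VIP)

    def inbound(self, pkt: Packet, rip: Address) -> Packet:
        port = next(self._ports)
        self._flows[port] = (pkt.src, pkt.dst)
        return Packet(src=(self.lb_ip, port), dst=rip)

    def outbound(self, reply: Packet) -> Packet:
        client, vip = self._flows[reply.dst[1]]
        return Packet(src=vip, dst=client)


snat = Layer4Snat(lb_ip="10.0.0.1")
client, vip, rip = ("203.0.113.10", 51515), ("192.0.2.100", 80), ("10.0.0.11", 8080)
to_server = snat.inbound(Packet(src=client, dst=vip), rip)  # server sees 10.0.0.1, not the client
to_client = snat.outbound(Packet(src=rip, dst=to_server.src))
```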

Benefits of Layer 4 load balancing

When it comes to Layer 4 load balancing, its benefits extend beyond lightning-fast speed and efficiency. Here are my top 3 things about Layer 4:

1. Performance

Layer 4 load balancing works at the transport layer, directing traffic based solely on IP addresses and ports rather than inspecting packet content. This straightforward approach enables super-fast packet forwarding and low processing overhead, making it incredibly efficient for high-volume applications and crucial for minimizing latency.

2. Protocol agnostic

Layer 4 load balancing is protocol-agnostic because it avoids examining application-layer content. This means it can efficiently route traffic for a broad spectrum of TCP and UDP protocols—from HTTP/HTTPS to SSH, FTP, SMTP, and DNS—providing exceptional versatility for various application setups.

3. Seamless horizontal scaling

At its heart, Layer 4 load balancing distributes workloads evenly across multiple servers, effectively preventing bottlenecks and keeping applications snappy. It also offers fault tolerance by automatically rerouting traffic away from any unhealthy servers. This makes seamless horizontal scaling possible, letting you easily add or remove servers as needed, ensuring your services stay up and running even when traffic surges or a server goes down.
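As an illustration of rerouting around unhealthy servers, here is a minimal round robin scheduler in Python. Round robin is just one possible scheduling algorithm (the text above doesn't prescribe one), and the actual health checking is out of scope: `mark_down` and `mark_up` are hypothetical hooks standing in for whatever marks a server as failed or recovered.

```python
from itertools import cycle


class RoundRobinPool:
    """Round robin over Real Servers, skipping any that have failed a health check."""

    def __init__(self, servers: list[str]) -> None:
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._ring = cycle(self.servers)

    def mark_down(self, server: str) -> None:
        self.healthy.discard(server)

    def mark_up(self, server: str) -> None:
        self.healthy.add(server)

    def next_server(self) -> str:
        # Try each server at most once per call so we never loop forever
        for _ in range(len(self.servers)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy Real Servers available")


pool = RoundRobinPool(["rip1", "rip2", "rip3"])
print([pool.next_server() for _ in range(3)])  # ['rip1', 'rip2', 'rip3']
pool.mark_down("rip2")                         # e.g. after a failed health check
print([pool.next_server() for _ in range(4)])  # ['rip1', 'rip3', 'rip1', 'rip3']
pool.mark_up("rip2")                           # back in rotation once it recovers
```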

Common Layer 4 use cases

I’d definitely recommend considering Layer 4 load balancing in the following scenarios:

Layer 4 DR mode use cases

- Medical imaging applications: Layer 4 DR mode is an ideal load balancing method for scenarios like medical imaging applications, where request traffic is small in size while reply traffic is very large.

- Video streaming applications: Layer 4 DR mode also works extremely well for video streaming applications due to its unrivalled performance.
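The reason DR suits request-small, reply-large workloads comes down to simple arithmetic: in DR mode only the requests pass through the load balancer. The sketch below counts application payload bytes only (ignoring headers and TCP ACKs, which do still flow through the load balancer in DR mode), and the workload figures are hypothetical, chosen purely for illustration.

```python
def bytes_through_lb(requests: int, request_bytes: int, reply_bytes: int, mode: str) -> int:
    """Application payload bytes the load balancer must forward (headers and ACKs ignored)."""
    inbound = requests * request_bytes
    if mode == "dr":
        return inbound  # replies go straight from server to client
    if mode in ("nat", "snat"):
        return inbound + requests * reply_bytes  # replies come back through the load balancer
    raise ValueError(f"unknown mode: {mode}")


# Hypothetical workload: 1,000 requests of 2 KB, each returning a 20 MB image
dr = bytes_through_lb(1_000, 2_000, 20_000_000, "dr")
nat = bytes_through_lb(1_000, 2_000, 20_000_000, "nat")
print(f"DR: {dr:,} bytes  NAT: {nat:,} bytes")
```

With these made-up numbers, the load balancer handles about 2 MB in DR mode versus roughly 20 GB in NAT or SNAT mode, a factor of about 10,000.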

Layer 4 NAT mode use cases

- Web servers: Layer 4 NAT works perfectly for simply spreading HTTP and HTTPS traffic among several web servers. It's a great fit when you don't need advanced features like maintaining specific user sessions or routing based on the content of the web request.

- Mail servers: Layer 4 NAT is ideal for distributing incoming email traffic (like SMTP, POP3, and IMAP) across several mail servers. This ensures your email services stay available even if a server goes down, as it efficiently handles these standard TCP-based protocols.

- Streaming applications that can’t use Layer 4 DR: While Layer 4 Direct Routing (DR) offers faster performance for high-throughput streaming, NAT is a perfectly viable alternative when DR's specific network setup isn't feasible, for example when your backend servers are isolated on different subnets.

Layer 4 SNAT mode use cases

- Cloud deployments: Cloud load balancers typically use SNAT (or a similar proxy) by default. That's because the way their virtual networks are set up often makes Direct Routing difficult or impossible. SNAT neatly solves this by ensuring the return traffic flows correctly without needing tricky routing changes on each individual virtual machine.

- Layer 4 SNAT mode can also be used to load balance web servers, mail servers, and streaming applications that can’t use Layer 4 DR (as explained above).

The choice between Layer 4 NAT and SNAT is an architectural one. With Layer 4 NAT, the load balancer must be the Real Servers' default gateway. With Layer 4 SNAT, it doesn't need to be.
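The architectural difference comes down to where the Real Server sends its reply. The Python sketch below assumes a simplified routing table (one directly connected subnet plus a default route) and a client outside the server's subnet; the function names and addresses are hypothetical, and policy-based routing is not modelled.

```python
import ipaddress


def next_hop(dst_ip: str, server_interface: str, default_gateway: str) -> str:
    """Where a Real Server sends a packet: on-link destinations directly, everything else via its gateway."""
    subnet = ipaddress.ip_interface(server_interface).network
    return dst_ip if ipaddress.ip_address(dst_ip) in subnet else default_gateway


def reply_returns_via_lb(mode: str, client_ip: str, lb_ip: str,
                         server_interface: str, default_gateway: str) -> bool:
    if mode == "nat":
        reply_dst = client_ip  # NAT keeps the client's IP, so the server replies to the client
    elif mode == "snat":
        reply_dst = lb_ip      # SNAT swaps in the load balancer's IP, so the server replies to it
    else:
        raise ValueError(f"unknown mode: {mode}")
    return next_hop(reply_dst, server_interface, default_gateway) == lb_ip


SERVER_IF, LB_IP, ROUTER, CLIENT = "10.0.0.11/24", "10.0.0.1", "10.0.0.254", "203.0.113.10"
print(reply_returns_via_lb("nat", CLIENT, LB_IP, SERVER_IF, default_gateway=LB_IP))    # True
print(reply_returns_via_lb("nat", CLIENT, LB_IP, SERVER_IF, default_gateway=ROUTER))   # False
print(reply_returns_via_lb("snat", CLIENT, LB_IP, SERVER_IF, default_gateway=ROUTER))  # True
```

In NAT mode the reply only returns through the load balancer (and gets translated back) if the load balancer is the gateway; in SNAT mode the reply is addressed to the load balancer itself, so the existing gateway can stay as it is.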

How to configure Layer 4 load balancing

The configuration steps will vary depending on the mode used and the application being load balanced, but here are some example use cases with step-by-step configuration and deployment instructions.

How to configure and deploy Layer 4 DR mode

Example: Layer 4 DR mode

- How to load balance Fiserv DNAConnect with Layer 4 DR mode

- How to load balance Planmeca Romexis with Layer 4 DR mode

Example: Layer 4 DR mode OR Layer 7 SNAT

- How to load balance Datacore Swarm Gateway with Layer 4 DR mode or Layer 7 SNAT

- How to load balance RabbitMQ with Layer 4 DR mode or Layer 7 SNAT

- How to load balance Papercut with Layer 4 DR mode or Layer 7 SNAT

- How to load balance Evertz Mediator-X with Layer 4 DR mode or Layer 7 SNAT

- How to load balance Leostream with Layer 4 DR mode or Layer 4 NAT

Example: Layer 4 DR mode AND Layer 7 SNAT

- How to load balance GE HealthCare Centricity PACS with Layer 4 DR and Layer 7 SNAT

- How to load balance GE Datalogue with Layer 4 DR AND Layer 7 SNAT

How to configure and deploy Layer 4 NAT mode

Example: Layer 4 NAT mode

How to configure and deploy Layer 4 SNAT mode

Example: Layer 4 SNAT mode

- How to load balance Access Rio with Layer 4 SNAT mode

- How to load balance Laurel Bridge Compass with Layer 4 SNAT mode

- How to load balance HP Anyware with Layer 4 SNAT mode

- How to load balance Verint Workforce Optimization with Layer 4 SNAT mode

Example: Layer 4 SNAT AND Layer 4 DR mode

Example: Layer 4 SNAT OR Layer 4 DR mode

Pairing Layer 4 load balancing with Layer 7

Layer 4 DR mode also works great in tandem with Layer 7 load balancing.

For example, an inexpensive pair of load balancers in Layer 4 DR mode can be used for maximum throughput and transparency alongside some mid- to high-end load balancers at Layer 7. This means SSL offload can be handled by the Layer 7 tier, at a much more affordable price than simply buying one big box.

Another use for a two-tiered load balancing model is to serve far greater volumes of traffic than would otherwise be possible. In this instance, an initial tier of Layer 4 DR load balancers distributes inbound traffic across a second tier of Layer 7, proxy-based load balancers. Splitting up the traffic in this way allows the more computationally expensive work of the proxy load balancers to be spread across multiple nodes. The whole solution can be built on commodity hardware and scaled horizontally over time to meet changing needs.
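A two-tier design can be sketched as two functions: a first tier that only looks at the connection's addresses and ports, and a second tier that can look at content. This assumes the first tier hashes the full 5-tuple to pick a Layer 7 node (a common approach, but not something the text specifies); the node names, pool names and path rule are hypothetical, and Python 3.9+ is assumed.

```python
import hashlib


def tier1_pick(l7_nodes: list[str], flow: tuple[str, int, str, int, str]) -> str:
    """Tier 1 (Layer 4 DR): choose a Layer 7 node from the flow's 5-tuple alone.

    Hashing the whole tuple keeps every packet of one connection on the same node
    while spreading different connections across the tier.
    """
    key = "|".join(map(str, flow)).encode()
    h = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return l7_nodes[h % len(l7_nodes)]


def tier2_route(http_path: str) -> str:
    """Tier 2 (Layer 7 proxy): now the content can be inspected, e.g. the URL path."""
    return "api-pool" if http_path.startswith("/api/") else "web-pool"


L7_NODES = ["l7-a", "l7-b", "l7-c", "l7-d"]
flows = [("203.0.113.10", port, "192.0.2.100", 443, "tcp") for port in range(40000, 44000)]
spread = {node: 0 for node in L7_NODES}
for f in flows:
    spread[tier1_pick(L7_NODES, f)] += 1
print(spread)
print(tier2_route("/api/orders"), tier2_route("/index.html"))  # api-pool web-pool
```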

For more on multi-tiered load balancing, check out this talk by the amazing Joe Williams from GitHub, which explains how to use Layer 4 DR with tunnelling at the front end. It’s a great example of the clever things that can be done using DSR, especially when the underlying infrastructure can be optimized and tinkered with.

Conclusion

Layer 4 Direct Routing (DR) sounds awesome: it’s fast and simple, and we love it! But if you’ve read this far, I’d like you to pause and take on board that, as a load-balancing expert, I see many cases where DR is NOT the perfect choice. So I’d strongly urge you to consider the available alternatives!

If you’re at all unsure what load balancing method to use, drop us a message and we can advise.

Remember, if you have legacy infrastructure there may be constraints that prevent you from deploying your load balancers in the recommended mode. But that doesn’t mean there aren’t workarounds available. That’s what we’re here for.

More on other load balancing methods

- When and how to use Global Server Load Balancing (GSLB)

- Layer 4 vs Layer 7 load balancing - we still love DSR, but…

- Compare Layer 4, 7, and GSLB techniques
