The first question we always ask our customers is:

"Are you looking for performance, reliability, maintainability — or all three?"

Because, at the end of the day, without clear objectives and required outcomes, it's nigh-on impossible to determine the right load balancing solution for your specific problem. But once those questions have been answered, where do you go next? How do you determine which load balancing technique is the right fit? What are the pros and cons of each? And how and when might they be used?

Load balancing fundamentals

Before we go any further, it's important to understand the fundamentals of the three main techniques, so you can determine the most appropriate load balancing solution for the problem you are trying to solve. While at a high level Layer 4 may be great for performance, and Layer 7 super flexible, things are a lot more nuanced than that, as you will see.

Load balancing describes a device's ability to distribute traffic effectively and efficiently across a group of servers, known as a server farm (or backend servers). Load balancing appliances can be deployed as hardware, as virtual appliances (e.g. VMware, Hyper-V, KVM, Nutanix, and Xen), or in the cloud (e.g. Amazon, Azure, and GCP). By preventing any one server from becoming overloaded, load balancers keep applications responsive; and when deployed as a clustered pair, they avoid becoming a single point of failure themselves, keeping the application highly available.

Typically, three core load balancing techniques can be employed: Layer 4 and Layer 7 (referring to layers of the OSI model), and Global Server Load Balancing (GSLB). Each method has its own advantages and drawbacks.

To be honest, using the OSI model references for load balancing is pretty confusing. All load balancers and methods actually act at multiple Layers from 2 to 7 because they all handle low-level routing and high-level application health checks. But tradition is a powerful thing, so let's stick with the official terminology and unpick the specific differences...

Layer 4 load balancing

At Layer 4 the load balancer acts just like a firewall. It routes connections between servers and clients based on simple IP address and port information, combined with health checks.
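To make "routing based on IP address and port information, combined with health checks" concrete, here is a minimal Python sketch of how a Layer 4 scheduler might pick a backend. It looks only at the connection's 5-tuple (protocol, source and destination IP and port) and each server's health flag; it never reads application data. Hashing the 5-tuple is just one possible scheduling method, chosen here for illustration (real load balancers also offer round robin, least connection, and weighted variants), and `Backend` and `pick_backend` are hypothetical names.

```python
import zlib
from dataclasses import dataclass


@dataclass
class Backend:
    ip: str
    port: int
    healthy: bool = True  # would be updated by a (hypothetical) health checker


def pick_backend(backends: list[Backend], src_ip: str, src_port: int,
                 dst_ip: str, dst_port: int, proto: str = "tcp") -> Backend:
    """Choose a backend using only connection-level (Layer 4) information.

    The connection's 5-tuple is hashed so that every packet of the same
    connection lands on the same server; no payload is inspected.
    """
    healthy = [b for b in backends if b.healthy]
    if not healthy:
        raise RuntimeError("no healthy backends")
    key = f"{proto}|{src_ip}|{src_port}|{dst_ip}|{dst_port}".encode()
    # crc32 gives the same result in every process, unlike hash() on a str
    return healthy[zlib.crc32(key) % len(healthy)]


pool = [
    Backend("10.0.0.11", 80),
    Backend("10.0.0.12", 80, healthy=False),  # failed its health check
    Backend("10.0.0.13", 80),
]
chosen = pick_backend(pool, "203.0.113.7", 51514, "192.0.2.10", 80)
print(chosen.ip)  # always one of the two healthy servers
```

Because the same 5-tuple always hashes to the same server, every packet of a connection reaches the same backend. Marking a server unhealthy, however, reshuffles many existing mappings, which is one reason production load balancers track connections in a table rather than relying on a hash alone.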

The Transport Layer (Layer 4) manages end-to-end connections, providing flow control, error control, and data communication between devices, systems, and hosts. Outgoing data is split into segments here before being passed down to Layer 3, and incoming segments are reassembled before being passed up to Layer 5 (the Session Layer).

Flow control regulates how much data is sent, and how quickly, so that a fast sender doesn't overwhelm a slower receiver. Layer 4 protocols include TCP and UDP, which identify applications by port number (IP addresses, by contrast, belong to Layer 3).
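The flow-control idea above can be illustrated with a simplified, TCP-style receive window: the sender may only have as many unacknowledged bytes in flight as the receiver has advertised room for. This is a toy model under stated assumptions (data is delivered and acknowledged within one tick, with no packet loss and no congestion control); `usable_window` and `simulate` are hypothetical helpers, not part of any library.

```python
def usable_window(snd_una: int, snd_nxt: int, rcv_wnd: int) -> int:
    """Bytes the sender may still transmit without overrunning the receiver.

    snd_una: oldest unacknowledged byte (sequence number)
    snd_nxt: next byte to be sent
    rcv_wnd: free buffer space most recently advertised by the receiver
    """
    in_flight = snd_nxt - snd_una
    return max(0, rcv_wnd - in_flight)


def simulate(total: int, buffer_size: int, drain_per_tick: int) -> list[int]:
    """Return how many bytes a fast sender transmits on each tick."""
    if buffer_size <= 0 or drain_per_tick <= 0:
        raise ValueError("buffer_size and drain_per_tick must be positive")
    sent_per_tick = []
    snd_una = snd_nxt = 0
    buffered = 0  # received but not yet read by the slow receiving application
    while snd_una < total:
        rcv_wnd = buffer_size - buffered
        n = min(usable_window(snd_una, snd_nxt, rcv_wnd), total - snd_nxt)
        snd_nxt += n
        # Simplification: the data arrives and is acknowledged within the tick.
        buffered += n
        snd_una = snd_nxt
        # The receiving application drains its buffer at its own, slower pace.
        buffered -= min(buffered, drain_per_tick)
        sent_per_tick.append(n)
    return sent_per_tick


print(simulate(total=10_000, buffer_size=4_000, drain_per_tick=1_000))
# [4000, 1000, 1000, 1000, 1000, 1000, 1000]
```

Once the receiver's buffer fills, the sender is throttled to exactly the rate at which the slow receiver reads, which is the behaviour described above.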

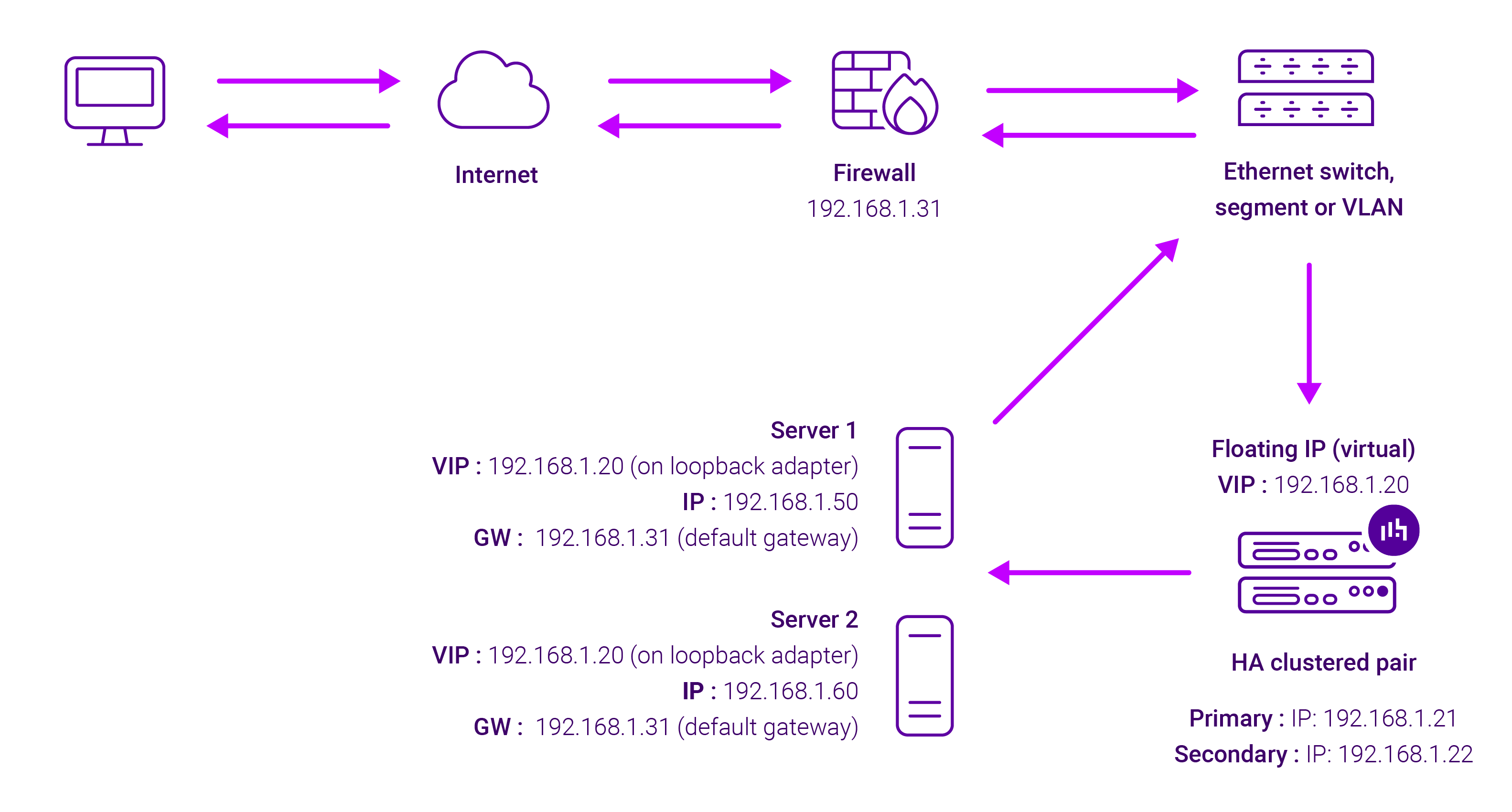

There are a number of different types of Layer 4 load balancing (e.g. Layer 4 DR mode, Layer 4 NAT mode, Layer 4 SNAT mode, and Layer 4 TUN). An example of a Layer 4 DR mode deployment is illustrated below:

Diagram 1: Layer 4 DR Mode

Note: Kemp, Brocade, Barracuda, and A10 Networks call this Direct Server Return, and F5 calls it N-Path.
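The practical difference between the Layer 4 modes is which packet headers the load balancer rewrites on the way to the real server. The Python sketch below models that for DR, NAT, and SNAT modes (TUN mode, which wraps the packet in an IP-in-IP tunnel, is not modelled). The addresses are example values and the functions are hypothetical; they describe the general behaviour of these modes, not any specific product's implementation.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    dst_mac: str


# Example addresses (hypothetical)
CLIENT_IP = "203.0.113.7"
VIP = "192.0.2.10"                # virtual IP that clients connect to
LB_MAC = "52:54:00:00:00:01"
LB_IP = "10.0.0.1"                # load balancer's address on the server network
SERVER_IP = "10.0.0.11"
SERVER_MAC = "52:54:00:00:00:11"


def forward_dr(p: Packet) -> Packet:
    # DR: only the destination MAC changes, so the server must share a Layer 2
    # segment with the load balancer. The server also holds the VIP (typically
    # on a loopback interface, with ARP for it suppressed), accepts the packet
    # unchanged, and replies directly to the client.
    return replace(p, dst_mac=SERVER_MAC)


def forward_nat(p: Packet) -> Packet:
    # NAT: the destination IP is rewritten to the real server. The server's
    # default gateway must be the load balancer, so replies pass back through
    # it and have their source rewritten back to the VIP.
    # (The Layer 2 header is rebuilt by normal routing; not modelled here.)
    return replace(p, dst_ip=SERVER_IP)


def forward_snat(p: Packet) -> Packet:
    # SNAT: the source IP is rewritten too, so the server replies to the load
    # balancer and can sit on any routable network, but it no longer sees the
    # client's real IP address.
    return replace(p, src_ip=LB_IP, dst_ip=SERVER_IP)


incoming = Packet(src_ip=CLIENT_IP, dst_ip=VIP, dst_mac=LB_MAC)
```

Seen this way, DR mode's speed and transparency come from touching as little as possible, while SNAT mode trades client-IP visibility for network flexibility.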

The most important thing to know about Layer 4 load balancing is that the application servers do all the work, and they establish a direct connection with the clients, which is fast, transparent, and easy to understand. But that means the servers need to be secure and scalable, and must handle all traffic requests as expected. If your application is a bit old and cranky and doesn't correctly handle users bouncing between servers in the cluster, you might need some of the features Layer 7 load balancers can offer. But before we get there, how do the various Layer 4 modes stack up?

What are the pros and cons of Layer 4 load balancing?

Layer 7 load balancing

At Layer 7, the load balancer acts as a reverse proxy, which means it maintains two separate TCP connections: one with the client and one with the server.
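The "two TCP connections" point is easiest to see in code. Below is a minimal sketch of a TCP reverse proxy using Python's standard `asyncio` library: the client connects to the proxy (connection one), and the proxy opens its own, separate connection to a backend (connection two), copying bytes between them. The listening address 127.0.0.1:8080 and backend address 127.0.0.1:8081 are assumptions, and a real Layer 7 load balancer would also parse the application protocol, choose among several backends, and health-check them.

```python
import asyncio

LISTEN_HOST, LISTEN_PORT = "127.0.0.1", 8080     # assumed proxy address
BACKEND_HOST, BACKEND_PORT = "127.0.0.1", 8081   # assumed backend address


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes in one direction until EOF, then close the destination."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
    finally:
        writer.close()


def make_handler(backend_host: str, backend_port: int):
    async def handle_client(client_reader, client_writer):
        # Connection 1 (client <-> proxy) has already been accepted.
        # Connection 2 (proxy <-> backend) is a brand-new TCP connection.
        try:
            backend_reader, backend_writer = await asyncio.open_connection(
                backend_host, backend_port
            )
        except OSError:
            client_writer.close()  # backend unreachable: drop the client
            return
        await asyncio.gather(
            pipe(client_reader, backend_writer),
            pipe(backend_reader, client_writer),
            return_exceptions=True,  # one side resetting shouldn't crash the handler
        )
    return handle_client


async def main() -> None:
    server = await asyncio.start_server(
        make_handler(BACKEND_HOST, BACKEND_PORT), LISTEN_HOST, LISTEN_PORT
    )
    async with server:
        await server.serve_forever()

# To run the proxy: asyncio.run(main())
```

Because the proxy terminates the client's connection itself, it is free to inspect or modify the data before forwarding it, which is what makes the application-aware features of Layer 7 possible.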

The Application Layer (Layer 7) is the layer that interacts most directly with the end user. It is application-aware and supports communication for end-user processes and applications, and the presentation of data for user-facing software applications (e.g. web browsers and email clients). Note that the client software applications themselves do not sit at this layer; instead, Layer 7 establishes connections with applications through the lower layers to present data in a way that is meaningful to the end user. Protocols include HTTP, FTP, SMTP, and SNMP.

At Layer 7, the load balancer has more information with which to make intelligent load balancing decisions, because it can see data from upper-level protocols such as FTP, HTTP, HTTPS, DNS, and RDP.
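For example, the sketch below makes a routing decision that a Layer 4 load balancer cannot, because it depends on the HTTP Host header and URL path inside the request rather than on IP addresses and ports. It is a simplified Python illustration that assumes plain HTTP/1.x (or HTTPS after TLS has been terminated on the load balancer); the pool names, hostnames, and paths are hypothetical, and real products express such rules in their own configuration syntax.

```python
# Hypothetical backend pools, purely for illustration.
POOLS = {
    "api": ["10.0.1.11:8080", "10.0.1.12:8080"],
    "static": ["10.0.2.11:80"],
    "default": ["10.0.3.11:80", "10.0.3.12:80"],
}


def parse_request_head(raw: bytes) -> tuple[str, str, dict[str, str]]:
    """Split an HTTP/1.x request head into (method, path, headers)."""
    head = raw.split(b"\r\n\r\n", 1)[0].decode("latin-1")
    request_line, *header_lines = head.split("\r\n")
    method, path, _version = request_line.split(" ", 2)
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()  # header names are case-insensitive
    return method, path, headers


def choose_pool(raw_request: bytes) -> str:
    """Pick a backend pool from the Host header and URL path (Layer 7 data)."""
    _method, path, headers = parse_request_head(raw_request)
    host = headers.get("host", "").split(":")[0].lower()  # drop any :port suffix
    if host == "api.example.com" or path.startswith("/api/"):
        return "api"
    if path.startswith(("/images/", "/css/", "/js/")):
        return "static"
    return "default"


req = b"GET /images/logo.png HTTP/1.1\r\nHost: www.example.com\r\n\r\n"
print(choose_pool(req), POOLS[choose_pool(req)])
```

Two requests from the same client IP and port can land in different pools here, which is exactly the extra information Layer 7 has to work with.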

However, Layer 7 also has a bigger security risk profile and uses more resources than Layer 4 load balancing. But given today's hardware, performance is not really an issue anymore. So Layer 7 is the default choice for most configurations I come across: it generally works first time, because the servers can be on any routable network. That said, I often use it in combination with Layer 4 and GSLB, to leverage the best parts of each technique for specific parts of the application.

What are the pros and cons of Layer 7 load balancing?

Next we move on to a solution that allows you to load balance across distributed servers...

Global Server Load Balancing (GSLB)

Global Server Load Balancing (GSLB) refers to the distribution of traffic across server resources located in multiple locations, such as multiple data centers. In other words, it is load balancing with a smart DNS!

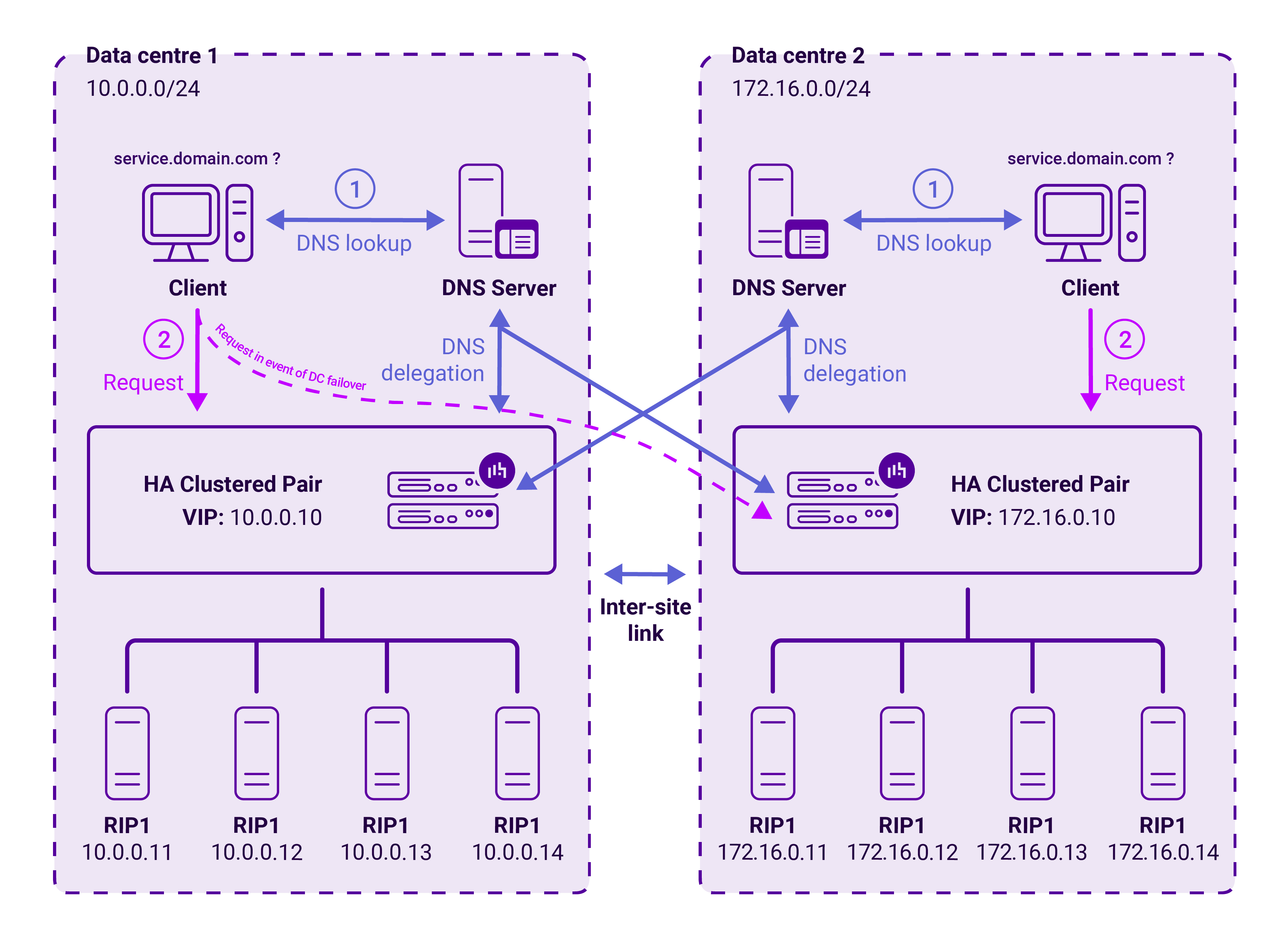

An example architecture is illustrated below:

Here, in the event of an issue or maintenance at one data center, all user traffic is directed seamlessly to the remaining data center, ensuring the high availability of the storage systems.

External user traffic is distributed across both active data centers, based on a real-time, intelligent assessment of the nodes that will deliver the best performance for each user.
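As a rough sketch of the "smart DNS" behaviour described above, the function below answers a query with the address of the best healthy data center. It assumes the GSLB already has a health-check result and a recent response-time measurement for each site; how those are gathered, and whether performance is measured per user or per region, is left out. `Site` and `resolve` are hypothetical names, and the returned string stands in for the A record a real DNS server would send.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    vip: str            # address handed out in DNS answers
    healthy: bool       # latest health-check result (assumed to be available)
    response_ms: float  # latest measured response time (measurement method assumed)


def resolve(sites: list[Site]) -> str:
    """Answer a DNS query with the VIP of the best-performing healthy site."""
    candidates = [s for s in sites if s.healthy]
    if not candidates:
        raise LookupError("no healthy site available")
    return min(candidates, key=lambda s: s.response_ms).vip


sites = [
    Site("dc1", "198.51.100.10", healthy=True, response_ms=40.0),
    Site("dc2", "203.0.113.10", healthy=True, response_ms=25.0),
]
print(resolve(sites))        # dc2 is faster, so its VIP is returned
sites[1].healthy = False     # dc2 goes down for maintenance
print(resolve(sites))        # all traffic now goes to dc1
```

In a real deployment, clients pick up a changed answer once their cached DNS record expires, which is why GSLB answers are typically given short TTLs.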

Internal user traffic is kept on the local site for performance reasons unless a failure occurs, in which case it is instantly and seamlessly redirected to another site. This is called topology-based routing, and it relies on each data center using a different subnet allocation, so the GSLB can tell from a client's source address which site is local to it.
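Topology-based routing can be sketched with Python's standard `ipaddress` module: look up which site's subnets contain the client's address, answer with that site's VIP while it is healthy, and fall back to another healthy site otherwise. The subnet allocation, VIPs, and function names below are hypothetical, and the alphabetical fallback order is an arbitrary choice made only to keep the example deterministic.

```python
import ipaddress
from typing import Optional

# Hypothetical allocation: each data center uses its own internal subnets.
SITE_SUBNETS = {
    "dc1": [ipaddress.ip_network("10.1.0.0/16")],
    "dc2": [ipaddress.ip_network("10.2.0.0/16")],
}
SITE_VIPS = {"dc1": "10.1.0.100", "dc2": "10.2.0.100"}


def local_site(client_ip: str) -> Optional[str]:
    """Return the site whose subnets contain the client's address, if any."""
    addr = ipaddress.ip_address(client_ip)
    for site, networks in SITE_SUBNETS.items():
        if any(addr in net for net in networks):
            return site
    return None


def resolve_internal(client_ip: str, healthy_sites: set[str]) -> str:
    """Keep internal clients on their local site; fail over only if it is down."""
    site = local_site(client_ip)
    if site in healthy_sites:
        return SITE_VIPS[site]
    # Local site unhealthy, or client outside every known subnet:
    # fall back to any healthy site (alphabetical order, for determinism).
    fallback = sorted(s for s in healthy_sites if s in SITE_VIPS)
    if not fallback:
        raise LookupError("no healthy site available")
    return SITE_VIPS[fallback[0]]


print(resolve_internal("10.1.5.20", {"dc1", "dc2"}))  # stays on dc1
print(resolve_internal("10.1.5.20", {"dc2"}))         # dc1 down: fails over to dc2
```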

What is the difference between GSLB and Cloudflare?

GSLB as a service, such as Cloudflare, is incredibly popular and powerful. In fact, I've seen several companies use both Cloudflare for external traffic and our GSLB for internal traffic. Because an external service has little or no access to applications inside your network, if you're running an application across multiple data centers you will normally need two GSLBs and two load balancers per site (although they can easily be combined into one appliance).

What are the pros and cons of Global Server Load Balancing (GSLB)?


Putting it all together...

At the end of the day, Layer 7 is typically the initial 'go-to', offering an easy-to-use, flexible, full application reverse proxy. However, I also sometimes recommend Layer 4 if you need high throughput, network simplicity, and transparency. And Global Server Load Balancing (GSLB) enables you to distribute internet or corporate network traffic across servers in multiple locations anywhere in the world, with intelligent application-level control.

That said, the most important takeaway here is that all three techniques work really well in combination with each other; in fact, they work best together. Whatever your needs, feel free to speak to our technical experts, who can walk you through all this so you can make an informed decision about what's right for your specific use case.